Key highlights

- Understand what the wget command does and why it’s popular for downloading files from the web.

- Install wget easily on Linux, macOS and Windows systems with simple package commands.

- Use wget syntax and commands to download a file, multiple files or entire websites.

- Control download speed, retries and background processes to optimize performance and reduce server load.

- Leverage Bluehost VPS hosting to run wget commands efficiently in a secure, high-performance server environment.

Ever wished you could download files from the internet without opening a browser? That’s where the wget command comes in.

This simple yet powerful tool helps you fetch single files, multiple files or even entire websites directly from the command line.

If you’ve ever wondered ‘what does wget do?’ or struggled with the ‘wget: command not found’ error, this guide is for you.

We’ll show you how to use wget, explain its syntax, share real examples and fix common errors along the way.

By the end, you’ll learn to use wget for downloading, automation and troubleshooting.

Let’s learn!

TL;DR: wget command (2025)

- Use case: Download files or entire websites from the command line

- Works on: Linux, macOS, Windows

- Common flags: -O rename, -c resume, -r recursive

- Fix errors: “command not found” → install with apt, yum or brew

- Best setup: Run wget on Bluehost VPS with root access & SSH

What is a wget command?

The wget command is a free utility that lets you download files directly from the Internet using the command line.

It supports HTTP, HTTPS and FTP protocols, making it flexible for handling different types of downloads from remote servers.

Wget is popular in Linux, but it also works on macOS and Windows, making it widely available across systems.

Unlike web browsers, wget can resume interrupted downloads, download multiple files and even mirror entire websites for offline viewing.

In short, wget provides a reliable and scriptable way to manage downloads without relying on a graphical interface.

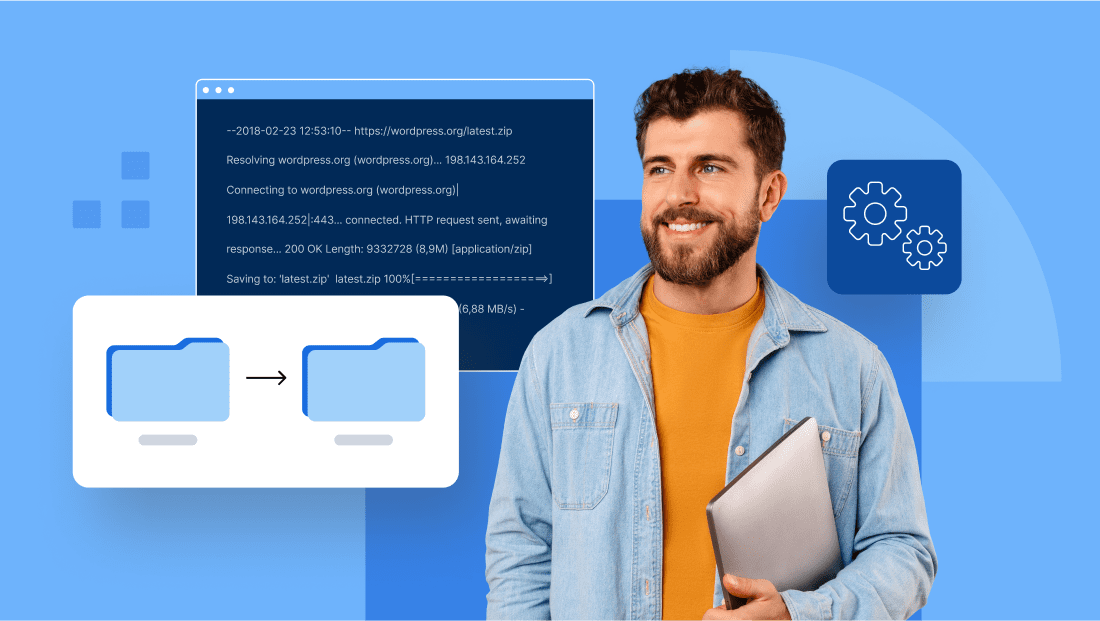

How does Wget work?

Wget works by sending HTTP, HTTPS or FTP requests to a remote server and saving the response as a local file.

Here’s how the wget command operates step by step:

- Send a request: Wget connects to a remote server using HTTP, HTTPS or FTP protocols.

- Download the file: By default, it saves the requested file in your current working directory.

- Handle different modes: You can download a single file, multiple files or entire websites with recursive downloading.

- Resume downloads: If the connection drops, wget can resume interrupted downloads instead of starting over.

- Work with networks: It retries automatically when a connection drops (up to 20 attempts by default), which makes it dependable for large files on unstable networks.

This makes wget a powerful command-line utility for automating downloads at scale.

How to install Wget?

You need to install wget before using it on your system.

Most Linux distributions include wget by default, but if the wget command is not found, you can install it manually.

Here’s how to install wget on different operating systems:

Ubuntu & Debian

- Run these commands:

sudo apt update

sudo apt install wget -y

- The wget package installs quickly on these Linux distributions.

CentOS/RHEL & Fedora

- Use the following command:

sudo yum install wget -y

- On Fedora, and on RHEL/CentOS 8 and later, you can use dnf instead: sudo dnf install wget -y

macOS & Windows

- macOS: Install wget using Homebrew:

brew install wget

- Windows: Use a package manager like Chocolatey:

choco install wget

- On Microsoft Windows, you can also download a Windows build of GNU Wget.

To confirm the installation, type:

wget --version

If this prints the GNU Wget version, wget is installed and you can run it directly from your terminal.

Note: On Windows, you may need to add wget to your system PATH after installation so you can run it from any Command Prompt or PowerShell window without typing its full path. In Windows PowerShell 5.1, wget is a built-in alias for Invoke-WebRequest, so type wget.exe to run GNU Wget.
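The install commands above differ only by package manager. As a rough sketch, the functions below check whether wget is already on your PATH and, if it is not, print the matching install command for the first package manager they find. The names `wget_install_cmd`, `detect_pkg_manager` and `check_wget` are hypothetical helpers, and the script only prints the command rather than running it, so you can review it before using sudo.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the wget install command for a package manager.
wget_install_cmd() {
  case "$1" in
    apt)    echo "sudo apt install -y wget" ;;
    dnf)    echo "sudo dnf install -y wget" ;;
    yum)    echo "sudo yum install -y wget" ;;
    pacman) echo "sudo pacman -S wget" ;;
    brew)   echo "brew install wget" ;;
    choco)  echo "choco install wget" ;;
    *)      return 1 ;;   # unknown package manager: print nothing
  esac
}

# Hypothetical helper: print the first supported package manager found on PATH.
detect_pkg_manager() {
  local pm
  for pm in apt dnf yum pacman brew choco; do
    if command -v "$pm" >/dev/null 2>&1; then
      echo "$pm"
      return 0
    fi
  done
  return 1
}

# Show the installed version, or suggest an install command (it is not run).
check_wget() {
  local pm
  if command -v wget >/dev/null 2>&1; then
    wget --version | head -n 1
  elif pm=$(detect_pkg_manager); then
    echo "wget not found. Try: $(wget_install_cmd "$pm")"
  else
    echo "wget not found and no known package manager detected." >&2
    return 1
  fi
}
```

After sourcing the file, run check_wget. The list checks dnf before yum because newer Red Hat-based systems ship both.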

If you’re running these commands on a server, we make shell access simple on our VPS plans at Bluehost.

With secure SSH access, you can run wget commands directly on your server without hassle. A VPS is ideal for wget because it delivers stability, control and reliable performance.

When you use wget for large downloads or website mirroring, you need dependable resources. Shared hosting may not provide enough flexibility or speed.

Our VPS hosting solves this with isolated resources, full root access and secure management options designed for advanced tasks.

With Bluehost VPS hosting, you can:

- Gain full root access: Install wget, set up cron jobs and run scripts without restrictions.

- Use dedicated resources: Enjoy faster download speeds and consistent performance.

- Run secure SSH sessions: Manage downloads and updates safely from anywhere.

- Automate tasks: Schedule recurring wget jobs with ease.

- Scale easily: Upgrade resources to handle bigger downloads or automation.

Bluehost VPS hosting provides the speed, security and control necessary for advanced wget tasks.

Power your wget tasks with a hosting plan built for speed and security. Explore Bluehost VPS hosting today.

Wget syntax

The wget command follows a simple structure. You enter the command, choose options and then provide the target URL.

The basic syntax looks like this:

wget [options] [URL]

- wget: Calls the wget command-line utility.

- [options]: Flags that control how wget runs.

- [URL]: The location of the file or website you want to download.

This simple form makes it easy to use wget to download a file or multiple files.

Common flags by goal

Wget supports many command-line options.

Here are the most useful ones:

| Flag | What it does | Example |
| --- | --- | --- |
| -O | Save with a new filename | wget -O new.zip URL |
| -c | Resume an interrupted download | wget -c URL |
| -b | Run wget in the background | wget -b URL |
| -r | Download recursively (for example, to mirror a website) | wget -r URL |
| --limit-rate | Limit download speed | wget --limit-rate=200k URL |
| --tries | Set retry attempts if a download fails | wget --tries=5 URL |

- -O [output file]: Save with a custom file name.

- -c: Resume interrupted downloads without restarting.

- -b: Run wget in the background.

- -r: Enable recursive downloading for entire websites.

- --limit-rate=[speed]: Limit the download speed to conserve bandwidth and reduce server load.

- --tries=[number]: Set retry attempts if the download fails.

These wget commands give you control over downloads and allow you to adapt to various scenarios.

Quick start: How to use wget to download a file?

You can use the wget command to download a file quickly with a single command line instruction. If you’re wondering how to use wget to download a file, here’s the simplest example.

Download a single file

- Run this command:

wget https://[example].com/file.zip

- Saves the file in your current working directory.

- If a file with the same name already exists, wget does not overwrite it; it saves the new copy as file.zip.1 (then file.zip.2 and so on). Use -O to choose a different output file.

This shows how you can use wget to download files directly from the internet with a single command.

Save with a different name

wget -O newfile.zip https://[example].com/file.zip

- The -O flag changes the output file name. If newfile.zip already exists, it is overwritten.

Save to a specific directory

wget -P /home/user/downloads https://[example].com/file.zip

- The -P flag saves the file in the given folder instead of the current directory.

Pipe output to another command

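wget -O - https://[example].com/archive.tar.gz | tar -xz

- -O - writes the download to standard output, so you can pipe it straight into another program. Here tar extracts a gzip-compressed archive without saving it first (tar -xz cannot read .zip files).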

These commands show how to use wget to download a file and manage the saved result with simple flags.
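Because -O simply names the output file, a failed or interrupted download can leave a partial file under the final name. One common pattern, sketched below under the assumption that you want the final file to appear only after a successful transfer, is to download to a temporary name with -O and rename it afterward. `fetch_atomic` is a hypothetical helper, and the `WGET` variable exists only so the command can be swapped for a stub when testing without network access.

```bash
#!/usr/bin/env bash
# Hypothetical helper: download URL to DEST, but only create DEST if wget succeeds.
# Set WGET to another command (for example a stub) to test without network access.
fetch_atomic() {
  local url=$1 dest=$2
  local tmp="${dest}.part"
  local status

  # -q silences progress output; -O writes to the temporary file.
  "${WGET:-wget}" -q -O "$tmp" "$url"
  status=$?

  if [ "$status" -eq 0 ]; then
    mv -f "$tmp" "$dest" || return   # rename is atomic on the same filesystem
  else
    rm -f "$tmp"                     # do not leave a half-written file behind
  fi
  return "$status"
}
```

Usage: fetch_atomic https://[example].com/file.zip file.zip. On failure the function returns wget's own exit status.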

Download multiple files

The wget command makes it easy to download multiple files with a single command line. Wget fetches them one after another rather than in parallel.

Multiple URLs in one command

wget https://[example].com/file1.zip https://[example].com/file2.zip

- Downloads each URL in turn with one command.

Use a list file

wget -i urls.txt

- The -i option reads a text file containing a list of URLs, one per line.

- This is useful for batch jobs or long lists of downloads.

Download numbered or sequence files

wget https://[example].com/file{1..5}.zip

- Your shell (bash or zsh), not wget, expands file{1..5}.zip into five URLs, file1.zip through file5.zip, which wget then downloads in turn.

Add -P to keep all of these downloads in one folder. Whether you download two files or hundreds, the same few options scale easily into bulk tasks.
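The -i option expects a plain text file with one URL per line. As a sketch, the hypothetical `make_url_list` helper below writes numbered URLs into such a file, and the hypothetical `download_list` passes it to wget -i together with -P so everything lands in one folder. The base URL and the fileN.zip naming pattern are placeholders.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write BASE/fileN.zip for N in START..END to LIST_FILE, one per line.
make_url_list() {
  local base=$1 start=$2 end=$3 list_file=$4
  local i
  : > "$list_file"                        # create or truncate the list
  for ((i = start; i <= end; i++)); do
    printf '%s/file%d.zip\n' "$base" "$i" >> "$list_file"
  done
}

# Hypothetical helper: download every URL in LIST_FILE into DIR.
# -nv (--no-verbose) prints one short line per file.
download_list() {
  local list_file=$1 dir=$2
  wget -nv -P "$dir" -i "$list_file"
}
```

For example, make_url_list "https://[example].com" 1 5 urls.txt followed by download_list urls.txt downloads.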

Control and optimize downloads

The wget command gives you control over speed, retries and background tasks for efficient file management.

Resume interrupted downloads

wget -c https://[example].com/largefile.zip

- The -c option resumes an interrupted download instead of restarting from scratch. The server must support range requests for this to work.

For large files, resuming saves both time and bandwidth because wget fetches only the missing part.

Limit download speed

wget --limit-rate=200k https://[example].com/file.zip

- --limit-rate caps the download speed (here at about 200 KB/s; use m for megabytes), a handy way to manage bandwidth and reduce server load.

Set retries and timeouts

wget --tries=5 --timeout=30 https://[example].com/file.zip

- --tries sets the number of retry attempts, and --timeout sets the DNS, connect and read timeouts (in seconds) at once, which helps on unstable network connections.

Run in background and view logs

wget -b https://[example].com/largefile.zip

- The -b flag runs wget in the background and writes its progress to a file named wget-log in the current directory. Follow it with tail -f wget-log.
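The options above combine naturally. The sketch below wraps them in a hypothetical `robust_download` function that resumes partial files (--continue, the long form of -c), caps bandwidth (--limit-rate), retries with a growing pause (--tries, --waitretry) and gives up on stalled connections (--timeout). The default values are illustrative, not recommendations from the wget project, and the `WGET` variable is only there so you can print the command instead of running it.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper combining resume, rate limiting, retries and timeouts.
# Override RATE, TRIES or TIMEOUT in the environment to change the defaults.
robust_download() {
  local url=$1
  local args=(
    --continue                      # same as -c: resume a partial file
    --limit-rate="${RATE:-200k}"    # cap speed, e.g. 200k or 1m
    --tries="${TRIES:-5}"           # retry attempts on transient errors
    --waitretry=10                  # wait 1 s, 2 s, ... up to 10 s between retries
    --timeout="${TIMEOUT:-30}"      # DNS, connect and read timeout in seconds
  )
  "${WGET:-wget}" "${args[@]}" "$url"
}
```

For a dry run, WGET=echo robust_download https://[example].com/largefile.zip prints the full command. To run the real download in the background, call wget with the same options plus -b and watch wget-log.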

Work with servers and protocols

The wget command supports different servers and protocols, making it flexible for various download tasks.

Download via FTP

wget ftp://[example].com/file.zip

- Wget supports FTP, letting you download files directly from a remote FTP server.

Access password-protected servers

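wget --user=username --password=secret ftp://[example].com/file.zip

- Use your username and password to access protected downloads. A password passed this way can appear in your shell history and in the process list, so prefer --ask-password, which prompts for it instead.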

Change user agent and add custom headers

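wget --user-agent="Mozilla/5.0" --header="Accept-Language: en-US" https://[example].com/file.zip

- --user-agent replaces wget’s default User-Agent string, and --header adds any extra HTTP header. This helps when a remote server requires specific headers or blocks default wget requests.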

Use proxies and cookies

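https_proxy=http://proxy.[example].com:8080 wget --load-cookies=cookies.txt https://[example].com/file.zip

- Wget reads proxy settings from the http_proxy and https_proxy environment variables, and --load-cookies loads cookies from a file in the Netscape cookies.txt format.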

Handle SSL certificate issues (safely)

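wget --no-check-certificate https://[example].com/file.zip

- This skips SSL certificate checks, which leaves the download open to tampering. Use it only for testing with hosts you trust; otherwise fix the certificate problem or point wget at the right CA file with --ca-certificate.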
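GNU Wget can also read credentials from a ~/.netrc file, which keeps passwords off the command line. The helper below is a hypothetical sketch: it reads the password from standard input, appends a machine entry to whichever file you pass, and restricts that file to its owner. The host and login are placeholders, and passing a scratch file first lets you check the result before touching your real ~/.netrc.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a netrc entry for HOST and LOGIN to FILE.
# The password is read from standard input so it never appears as an argument.
# Usage: add_netrc_entry FILE HOST LOGIN   (then type the password and press Enter)
add_netrc_entry() {
  local file=$1 host=$2 login=$3 password
  IFS= read -r password || return 1
  touch "$file"
  chmod 600 "$file"   # readable and writable by the owner only, before writing secrets
  printf 'machine %s login %s password %s\n' "$host" "$login" "$password" >> "$file"
}
```

After running add_netrc_entry ~/.netrc [example].com username, a plain wget ftp://[example].com/file.zip picks up the stored credentials. For a one-off download, wget --user=username --ask-password URL prompts without storing anything.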

Advanced wget examples

The wget command includes powerful options for advanced use cases like mirroring sites, filtering downloads and checking links.

Mirror a website (recursive download)

wget -r -np -k https://[example].com

- The -r flag enables recursive downloading so you can copy an entire website for offline viewing. -np (--no-parent) keeps wget from climbing above the starting directory, and -k (--convert-links) rewrites links so the pages work locally.

Add -p (--page-requisites) so wget also fetches the images, stylesheets and scripts each page needs, which keeps your offline copy complete.

Download only updated files (timestamping)

wget -N https://[example].com/file.zip

- The -N flag (timestamping) downloads the file only if the remote copy is newer than your local one, reducing unnecessary transfers and server load.

Filter by file type or pattern

wget -r -A pdf,docx https://[example].com/docs/

- The -A (--accept) option keeps only files with the listed extensions, such as PDFs and Word documents. Wget still fetches HTML pages to find links but deletes them afterward.

Check links only (site health)

wget --spider -r https://[example].com

- The --spider option checks links without saving files, which is useful for finding broken links on large websites.
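The recursive options above are often combined into one polite mirroring command. The sketch below is a hypothetical `mirror_site` wrapper; --mirror turns on recursion and timestamping with unlimited depth, and the --wait and --random-wait values are illustrative choices to reduce load on the server, not fixed requirements. As before, `WGET` is a hypothetical override for dry runs.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper that builds an offline copy of a site in DEST (default: mirror).
mirror_site() {
  local url=$1 dest=${2:-mirror}
  local args=(
    --mirror              # shorthand for -r -N -l inf --no-remove-listing
    --no-parent           # never climb above the starting directory
    --page-requisites     # also fetch images, CSS and scripts each page needs
    --convert-links       # rewrite links so pages work offline
    --adjust-extension    # save HTML pages with an .html extension
    --wait=1              # pause about a second between requests...
    --random-wait         # ...randomized between 0.5x and 1.5x of --wait
    --directory-prefix="$dest"
  )
  "${WGET:-wget}" "${args[@]}" "$url"
}
```

Usage: mirror_site https://[example].com site-copy. Prefix it with WGET=echo to print the command first.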

Automate downloads

The wget command can automate recurring tasks, making the download process faster and more reliable.

Schedule recurring jobs with cron

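0 2 * * * /usr/bin/wget -q -i /home/user/urls.txt -P /home/user/downloads

- This crontab entry runs wget every day at 2:00 a.m. Use absolute paths, because cron jobs run with a minimal environment and a different working directory than your shell.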

Loops and ranges for bulk downloads

for i in {1..10}; do wget https://[example].com/file$i.zip; done

- Combine wget with shell loops to automate downloading multiple files.

Logging and exit codes

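wget -o wget-log https://[example].com/file.zip

- The -o option writes all of wget’s messages to the named log file instead of the terminal; use -a to append to an existing log.

- Add -d (--debug) for detailed output when troubleshooting.

- Check wget’s exit status ($?) in scripts: 0 means success, while non-zero codes signal failures such as a network error (4) or a server error response (8).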

Automating wget with these command-line options gives you control, repeatability and a clean directory structure for downloaded files.
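GNU Wget documents a fixed set of exit statuses, which makes failures easy to report from cron jobs and scripts. The sketch below maps those codes to short messages and wraps a download so that failures are logged and explained; `wget_exit_message` and `logged_download` are hypothetical names, the default log path is a placeholder, and `WGET` is again a hypothetical override for testing.

```bash
#!/usr/bin/env bash
# Map GNU Wget's documented exit statuses to short descriptions.
wget_exit_message() {
  case "$1" in
    0) echo "No problems occurred" ;;
    1) echo "Generic error" ;;
    2) echo "Parse error (options, .wgetrc or .netrc)" ;;
    3) echo "File I/O error" ;;
    4) echo "Network failure" ;;
    5) echo "SSL verification failure" ;;
    6) echo "Username/password authentication failure" ;;
    7) echo "Protocol error" ;;
    8) echo "Server issued an error response (for example 404)" ;;
    *) echo "Unknown exit status $1" ;;
  esac
}

# Hypothetical wrapper: append wget's messages to LOG and explain failures on stderr.
logged_download() {
  local url=$1 log=${2:-wget.log}
  local status
  "${WGET:-wget}" -a "$log" "$url"   # -a appends to the log instead of overwriting
  status=$?
  if [ "$status" -ne 0 ]; then
    echo "wget failed for $url: $(wget_exit_message "$status")" >&2
  fi
  return "$status"
}
```

In a crontab, call a script containing logged_download so the error message lands in cron's mail or your own log.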

Common wget errors and troubleshooting

Even with the correct wget commands, you may encounter errors while working from the command line.

Troubleshooting these problems helps you understand how wget behaves, fix failures and keep downloads running smoothly.

1. Fix “wget command not found”

The “wget: command not found” error appears when wget is not installed or its directory is not in your PATH.

On Debian or Ubuntu, run the following command:

sudo apt install wget -y

Then run wget --version to confirm it is installed correctly.

If wget is installed but the error persists, check that its directory is in your PATH; reinstalling often fixes broken paths. On Windows, install wget with Chocolatey or download a Windows build of GNU Wget and add its folder to your PATH.

2. SSL and certificate errors

SSL errors usually mean the server’s certificate is expired, self-signed or issued for a different hostname, or that your system’s CA certificates are missing or outdated.

You can bypass the check with the following command:

wget --no-check-certificate https://[example].com/file.zip

However, it’s safer to verify certificates. Update your system’s CA certificates (the ca-certificates package on most Linux distributions) and SSL libraries instead.

On Arch Linux, missing SSL packages may prevent wget from downloading over HTTPS.
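To check whether your wget build can handle HTTPS at all, look at the feature list printed by wget --version: +https means HTTPS support was compiled in, and -https means it was not. The helper below is a hypothetical sketch that reads that output on standard input, so you can also test it against saved text.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if `wget --version` output (read from stdin)
# lists the +https feature, meaning HTTPS support was compiled in.
wget_supports_https() {
  grep -qF -- '+https'
}

# Check the wget on your PATH and suggest a fix if HTTPS is missing.
check_wget_https() {
  if wget --version 2>/dev/null | wget_supports_https; then
    echo "This wget build supports HTTPS."
  else
    echo "No HTTPS support found; reinstall wget from your package manager." >&2
    return 1
  fi
}
```

Run check_wget_https after any reinstall to confirm the fix.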

3. 403/404, redirects and authentication

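A 404 error means the URL is wrong or the file has moved. A 403 error means the server refused the request, often because it requires authentication or blocks wget’s default user agent.

To fix authentication problems, create a .netrc file or pass your username and password with the wget command.

Wget follows up to 20 redirects by default; change this limit with --max-redirect. If you need to download a file from a protected site, provide credentials securely, for example with --ask-password.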
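When a download fails with a 403, a 404 or a redirect loop, it helps to see exactly what the server answered. With -S (--server-response), wget prints each response’s status line and headers to standard error, and --spider skips saving the body. The hypothetical `last_http_status` helper below pulls the last status code out of that output, which is the final answer after any redirects; `http_status` is a hypothetical convenience wrapper around it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the last HTTP status code found in `wget -S` output (stdin).
# wget indents status lines, e.g. "  HTTP/1.1 404 Not Found"; awk ignores the indent.
last_http_status() {
  awk 'index($1, "HTTP/") == 1 { code = $2 } END { if (code != "") print code }'
}

# Check a URL without downloading it; wget writes the -S output to stderr.
http_status() {
  wget -S --spider --max-redirect=5 "$1" 2>&1 | last_http_status
}
```

Usage: http_status https://[example].com/file.zip prints a code such as 200, 403 or 404.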

4. DNS, proxy and timeout issues

Network failures can occur when you run the wget command on unstable connections.

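Increase retry attempts with --tries or adjust timeouts with --timeout (or the more specific --dns-timeout, --connect-timeout and --read-timeout). If your network requires a proxy, set the http_proxy and https_proxy environment variables, which wget reads automatically.

DNS failures show up as “unable to resolve host address”. Check the hostname for typos, then confirm that your system’s DNS resolver is working.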
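For unstable networks, wget lets you set each timeout separately and retry even when a connection is refused. The sketch below is a hypothetical `patient_download` function; the timeout values are illustrative, and the optional PROXY variable (with a placeholder address) sets https_proxy and http_proxy for the wget process only. `WGET` is a hypothetical override for testing.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper for slow or flaky networks.
# Set PROXY (for example http://proxy.example.com:8080) to route through a proxy.
patient_download() {
  local url=$1
  local args=(
    --dns-timeout=10       # give up on name resolution after 10 s
    --connect-timeout=15   # give up on opening the connection after 15 s
    --read-timeout=60      # give up if no data arrives for 60 s
    --tries=10             # retry up to 10 times
    --retry-connrefused    # treat "connection refused" as transient and retry
  )
  if [ -n "${PROXY:-}" ]; then
    # These variable assignments apply only to this one command.
    https_proxy=$PROXY http_proxy=$PROXY "${WGET:-wget}" "${args[@]}" "$url"
  else
    "${WGET:-wget}" "${args[@]}" "$url"
  fi
}
```

Usage: PROXY=http://proxy.[example].com:8080 patient_download https://[example].com/file.zip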

5. Advanced troubleshooting

Beyond common issues, some advanced cases may require notable fixes. Here are extra troubleshooting tips for handling wget commands effectively:

- Use an https:// URL when secure servers reject plain HTTP requests, especially for APIs and modern websites.

- If you keep receiving a stale copy of a file, retry with --no-cache, which asks servers and proxies for a fresh copy.

- On Linux, run command -v wget to confirm which binary runs. It is usually /usr/bin/wget; a different path can mean another copy is shadowing it.

- In minimal or custom environments such as containers, wget may fail on HTTPS if SSL libraries or CA certificates are missing or outdated.

- When downloading files from APIs, add -S (--server-response) to print response headers and spot blocked or redirected requests.

- Check online for advanced wget examples that address scripting issues with loops, proxies or custom agents.

- Understanding how wget follows links helps you diagnose recursive retrieval issues and incomplete downloads; -l (--level) sets the recursion depth, which defaults to 5.

- Automate recovery by scheduling cron jobs that rerun wget -c when earlier downloads fail due to unstable connections.

- Fine-tune wget commands with options like --wait, --random-wait and --waitretry to avoid being blocked by servers.

- If you’re unsure what wget is doing in a failed script, rerun it with --debug to capture details.

Tip: If you’re unsure about making changes directly on a live website, you can use Bluehost’s staging environments to test wget commands safely.

A staging site lets you experiment with downloads, scripts and automation without risking downtime.

Once you’re confident everything works, you can push changes live with one click.

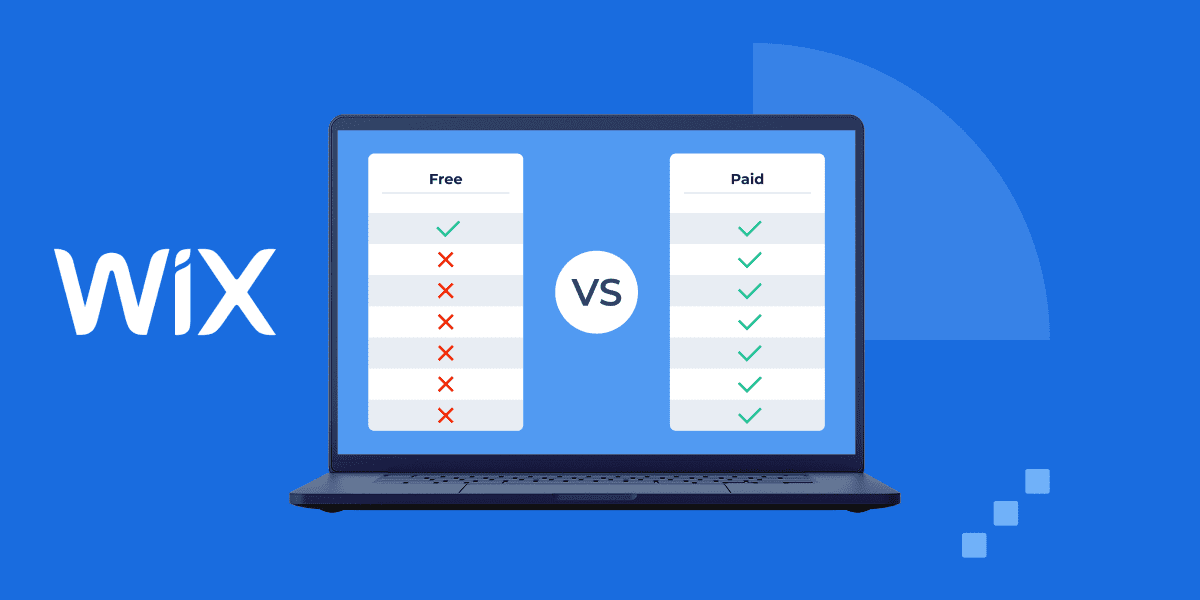

Wget vs curl: Which should you use?

The wget command and curl are both popular command-line tools for transferring files.

Before diving deeper into their individual strengths, here’s a quick comparison table to highlight the key differences:

Comparison table: Wget vs curl

| Feature | Wget | Curl |
| --- | --- | --- |
| Primary use | Automating downloads, scripting and mirroring sites | API requests and single transfers |
| Installation | Preinstalled on many Linux distributions; easy to install on macOS and Windows | Preinstalled on macOS, recent versions of Windows and many Linux distributions |
| Resume downloads | Built in with -c | Supported with -C - |
| Protocols supported | HTTP, HTTPS and FTP | A wide set, including HTTP, HTTPS, FTP, SMTP and LDAP |
| Logging | Writes to a log file with -o, or to wget-log when run in the background | Writes to the terminal unless you redirect output |
| Website mirroring | Supports recursive retrieval to create local copies of websites | Not designed for full-site downloads |
| Ease of use | Simple syntax; saves files to disk by default | Prints to standard output by default; many options to learn |
| Output handling | Names the file after the URL; -O sets a custom name | Use -o to name the file or -O to keep the remote name |
| Use cases | Backup scripts, automation, testing | API testing, integration with applications |

Wget commands are designed for downloading files, automating tasks and creating local copies of remote data. Curl focuses more on APIs and advanced request handling.
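For everyday downloads the two tools overlap heavily. The sketch below shows rough equivalents side by side in a hypothetical `fetch` function that prefers wget and falls back to curl, assuming at least one of them is installed. Note that curl needs -L to follow redirects and -f to return an error on HTTP failures, behaviors wget has by default.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print which downloader to use, preferring wget.
pick_downloader() {
  if command -v wget >/dev/null 2>&1; then
    echo wget
  elif command -v curl >/dev/null 2>&1; then
    echo curl
  else
    return 1
  fi
}

# Hypothetical helper: download URL to OUT with whichever tool is available.
fetch() {
  local url=$1 out=$2
  case "$(pick_downloader)" in
    wget) wget -O "$out" "$url" ;;       # follows redirects by default
    curl) curl -fL -o "$out" "$url" ;;   # -f: fail on HTTP errors, -L: follow redirects
    *)    echo "Neither wget nor curl is installed." >&2; return 1 ;;
  esac
}

# Resuming a partial download, for comparison:
#   wget -c "$url"
#   curl -fL -C - -O "$url"
```

Usage: fetch https://[example].com/file.zip file.zip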

Why choose Wget?

- Wget comes preinstalled on many Linux distributions, making it easy to get started.

- You can easily install wget using package managers across various distributions.

- GNU Wget supports HTTP, HTTPS and FTP, making it versatile for different sources.

- Wget can resume partially downloaded files with -c instead of starting over.

- With recursive retrieval, wget can mirror an entire website and save it for offline use.

- You can use wget to download batches of files from a list or run scheduled jobs.

- Wget can run in the background and write its progress to a log file, making monitoring easier.

Why choose curl?

- Curl offers more granular control over HTTP requests and supports a wider set of protocols.

- It’s preferred when working with APIs, where you need fine control over request methods, headers and bodies.

- Curl is not ideal for large automation tasks like mirroring, where wget commands excel.

Which should you use in 2025? Choose wget for automation, recursive downloads and scripting. Pick curl when you need advanced request control and API testing.

Final thoughts

The wget command remains one of the most reliable tools for downloading files directly from the command line. It is easy to install and verify across Linux, macOS and Windows systems.

Beginners often start by learning how to use wget step by step. Understanding what wget does helps when exploring more advanced options like recursive retrieval and background jobs.

Many wget examples demonstrate how to automate tasks, schedule downloads or send custom headers.

Whether you’re running the Linux wget command on servers or desktop systems, the process stays consistent and predictable.

Knowing how wget works at a deeper level makes troubleshooting easier and scripting more reliable. For advanced tasks, our VPS hosting gives you root access, secure SSH and dedicated resources to run wget efficiently.

Ready to scale your downloads and automate your processes? Explore Bluehost VPS hosting today!

FAQs

You can use wget by typing wget [URL] in the command line. It downloads the file into the current working directory. You can also add flags for logging, renaming and automation when downloading a single file.

The wget command is used to download files, mirror websites and automate transfers without requiring a browser to be opened. Many administrators use wget to schedule recurring downloads across servers in most Linux distributions.

To transfer files, use wget with FTP or HTTPS links. It supports both single transfers and bulk downloads. With -o, wget also records each transfer in a log file for easier tracking.

You can use wget with the recursive flag -r to mirror a site and browse it offline. The downloaded copy is saved as local files that you can archive for future use.

Wget automates downloads, while curl is better for testing requests. You usually use wget for scripts and automation, because it focuses on downloading files and managing the download process.

On Linux, install wget with the following command: sudo apt install wget -y. This installs the wget package on Debian and Ubuntu. On macOS, run brew install wget, while Windows users can run choco install wget or download a Windows build of GNU Wget.

Yes. Use wget with the -c flag to resume a partially downloaded file without restarting. This saves bandwidth because wget fetches only the remaining data.

Yes. Wget supports HTTPS for secure downloads as well as FTP. Most distribution packages are built with SSL support; run wget --version and look for +https in the feature list to confirm.

Standard wget commands include wget [URL] to download, -O to rename files and -r for recursive retrieval. Beginners often practice these basic commands before moving on to automation.

Wget writes messages to a log file when you use -o, or to wget-log in the current directory when it runs in the background with -b. Check these logs when errors occur; rerunning with --debug adds more detail.

You can use wget with -O to rename files or -i to read an input file for batch downloads. With -o or -a, wget saves details in a log file so you can track completed and failed tasks.

On Arch Linux, run sudo pacman -S wget to install wget quickly. This installs the GNU Wget package from the official repositories, which includes HTTPS support.

A basic command is wget -r -p [URL]. It downloads an entire website along with the images and stylesheets its pages need and stores the copy locally. Add -k to convert links for offline browsing.

Put one URL per line in an input file, then run wget -i file.txt to download them all. Wget also supports HTTPS URLs and the --user and --password options for protected servers.

Yes. Wget is a free utility designed for retrieving files from the Internet. You can download a single file or resume a partially downloaded file easily.

Yes. Wget is better for automation, bulk downloads and mirroring; curl is better for API requests.

Install it using your distro’s package manager: sudo apt install wget -y (Ubuntu/Debian) or sudo yum install wget -y (CentOS).

Yes. Bluehost VPS hosting gives you shell access with root privileges, letting you run Wget for automation, mirroring or large file downloads.
