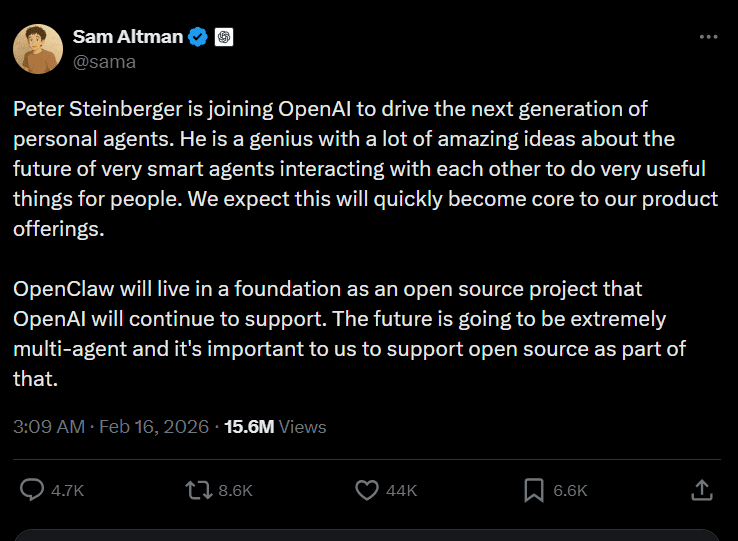

OpenAI has hired Peter Steinberger, the creator of OpenClaw, signalling a clear strategic direction: the company is doubling down on autonomous AI agents.

CEO Sam Altman publicly confirmed the hire on 15th February, describing Steinberger as joining to help advance OpenAI’s work on personal AI systems capable of taking action on behalf of users.

The announcement was concise, but the implications are broader. This is not about improving chat responses. It’s about moving beyond chat entirely.

Who is Peter Steinberger and why does he matter?

Peter Steinberger is best known as the founder and creator of OpenClaw, an open-source AI agent framework designed to execute tasks across applications.

OpenClaw, previously known as OpenClawd during its early development phase, gained traction among developers for one reason: it moved beyond conversational demos and focused on execution.

Rather than simply generating reactive text responses, OpenClaw agents were designed to log into tools, perform actions, retrieve data and complete multi-step workflows with minimal user intervention. That shift from conversation to capability is what set the project apart within the AI developer community.

Within the AI ecosystem, OpenClaw drew attention for:

- A developer-first architecture

- Transparent, open-source code

- Early demonstrations of cross-app task automation

- Active community experimentation

Steinberger’s background blends technical depth with product pragmatism: a profile aligned with OpenAI’s current priorities.

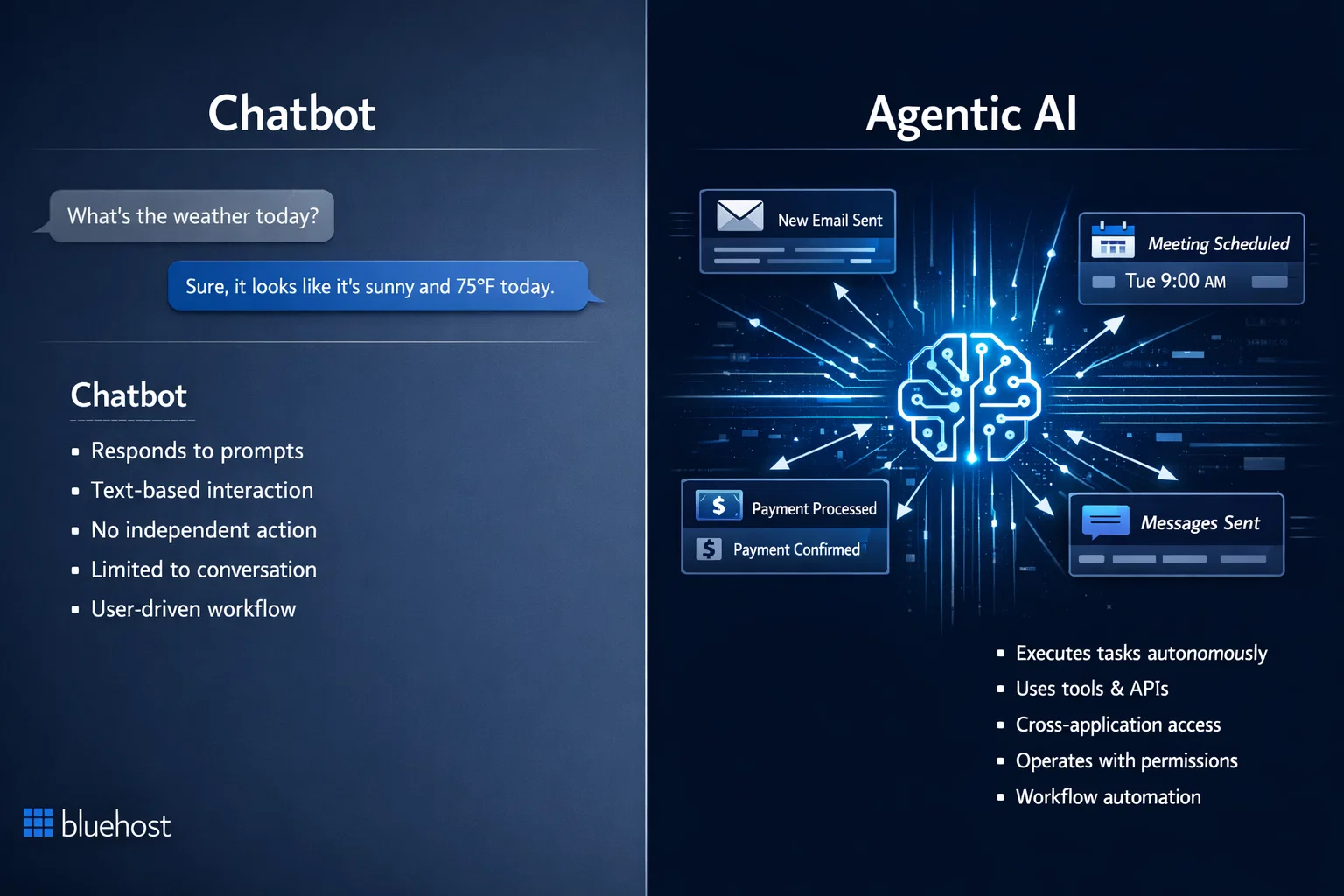

What makes OpenClaw different from a chatbot?

The distinction between a chatbot and an autonomous agent is operational, not cosmetic.

A chatbot responds to prompts. An autonomous agent executes tasks.

OpenClaw agents are designed to:

- Manage inboxes by triaging and responding to emails

- Book reservations

- Check in for flights

- Interact with third-party web interfaces

- Operate within messaging platforms

The key difference is tool use. Instead of returning instructions, the agent performs the action.

This is a structural shift in AI design. Chat interfaces are passive. Agents are active systems with access to credentials, tools and workflows.
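To make the chatbot/agent distinction concrete, here is a minimal sketch of the dispatch step behind tool use. The model emits a structured action instead of prose, and the runtime executes it. The tool names (`check_in_flight`, `send_email`) and the JSON action format are hypothetical illustrations, not OpenClaw's actual API. A real agent would call external services where these stubs just return strings.

```python
import json
from typing import Callable


# Hypothetical tools: stand-ins for real integrations (airline site, mail client).
def check_in_flight(booking_ref: str) -> str:
    return f"checked in {booking_ref}"


def send_email(to: str, body: str) -> str:
    return f"sent to {to}"


# Registry mapping the action names the model may emit to executable functions.
TOOLS: dict[str, Callable[..., str]] = {
    "check_in_flight": check_in_flight,
    "send_email": send_email,
}


def run_step(model_output: str) -> str:
    """Execute one model-proposed action.

    Assumes (hypothetically) the model replies with JSON such as
    {"tool": "check_in_flight", "args": {"booking_ref": "ABC123"}}.
    A chatbot would show this text to the user; an agent runs it.
    """
    action = json.loads(model_output)
    tool = TOOLS.get(action["tool"])
    if tool is None:
        raise ValueError(f"unknown tool: {action['tool']}")
    return tool(**action.get("args", {}))


if __name__ == "__main__":
    reply = '{"tool": "check_in_flight", "args": {"booking_ref": "ABC123"}}'
    print(run_step(reply))  # checked in ABC123
```

The entire difference from a chatbot sits in `run_step`: the same model output that a chat interface would display as instructions is parsed and executed directly.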

OpenAI’s hiring of OpenClaw’s creator suggests that this execution layer, not the conversation layer, is becoming central to its roadmap.

Why the shift to OpenAI?

In a post titled “OpenClaw, OpenAI and the future,” Peter Steinberger confirmed he is joining OpenAI to “be part of the frontier of AI research and development and continue building.”

He said OpenClaw’s success revealed an “endless array of possibilities,” but his next goal is broader: to build an AI agent “that even my mum can use.” Achieving that requires deeper safety work and access to cutting-edge models and research. Partnering with OpenAI, he argued, is the fastest way to scale that vision globally. “What I want is to change the world, not build a large company,” he wrote.

Steinberger also emphasized that OpenClaw will remain open source. OpenAI has committed to supporting the project, which he plans to formalize as a foundation. The aim is to keep it a space for developers and users who want ownership of their data, while expanding support across more models and companies.

OpenClaw’s rise exposed the security gaps in AI agents

OpenClaw’s rapid breakout highlighted both the promise and the risk of autonomous AI agents. The system impressed users by taking real actions such as managing inboxes, booking travel and operating across apps. However, Bloomberg reports that its security model remains a work in progress.

Researchers have pointed to specific risks, including prompt injection attacks, mishandling of stored credentials and the potential for unintended or “rogue” actions when agents are granted broad permissions.

Because these systems can log into accounts and execute tasks autonomously, vulnerabilities carry a much larger blast radius than traditional chatbots.

That expanding blast radius is now prompting a broader question for businesses: not just how these agents behave, but where and under what infrastructure safeguards they should operate.
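The permission problem described above can be illustrated with a minimal sketch. One common mitigation is to grant each agent an explicit, least-privilege set of actions, deny everything else by default, require user confirmation for sensitive actions, and log every decision so permissions can be audited. The `AgentPolicy` class and the action names are hypothetical. A gate like this limits what a prompt-injected instruction can trigger, but it does not prevent prompt injection itself.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    """Hypothetical least-privilege policy: only listed actions are allowed."""

    allowed: frozenset[str]
    # Allowed actions that still need explicit user confirmation each time.
    needs_confirmation: frozenset[str] = frozenset()
    # (action, decision) pairs, kept so permissions can be audited later.
    audit_log: list[tuple[str, str]] = field(default_factory=list)

    def authorize(self, action: str, user_confirmed: bool = False) -> bool:
        if action not in self.allowed:
            decision = "denied"  # deny by default: never granted
        elif action in self.needs_confirmation and not user_confirmed:
            decision = "pending_confirmation"
        else:
            decision = "allowed"
        self.audit_log.append((action, decision))
        return decision == "allowed"


if __name__ == "__main__":
    policy = AgentPolicy(
        allowed=frozenset({"read_inbox", "draft_reply", "send_email"}),
        needs_confirmation=frozenset({"send_email"}),
    )
    print(policy.authorize("read_inbox"))                    # True
    print(policy.authorize("transfer_funds"))                # False
    print(policy.authorize("send_email"))                    # False
    print(policy.authorize("send_email", user_confirmed=True))  # True
    print(policy.audit_log)
```

Denying by default means a new or unexpected action fails closed, which keeps the blast radius bounded by what the user explicitly granted.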

How is OpenClaw reshaping infrastructure decisions?

As AI agents begin logging into systems and executing tasks autonomously, security risk shifts from model behavior to infrastructure design.

That shift is beginning to influence infrastructure conversations inside some organizations. As AI agents move from experimental tools to systems with login credentials and operational authority, security teams are assessing where those agents execute and how tightly they are contained.

Rather than running them in general-purpose environments, some businesses are placing agent workloads in isolated virtual machines, VPS hosting environments or segmented network zones designed to limit lateral movement and restrict outbound access.

The objective is not to eliminate model risk, but to reduce the potential impact if an agent is compromised or misconfigured.
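Restricting outbound access is normally enforced at the network layer with firewall rules, security groups or proxy policies. The same idea can also be sketched at the application layer: before an agent's HTTP tool makes a request, check the destination against an explicit allowlist. The host names below are placeholders, and a check like this complements network segmentation rather than replacing it.

```python
from urllib.parse import urlsplit

# Placeholder allowlist: the only hosts this agent's tools may contact.
ALLOWED_HOSTS = {"api.airline.example", "mail.example.com"}


def egress_permitted(url: str) -> bool:
    """Return True only for HTTPS requests to an exactly allowlisted host."""
    parts = urlsplit(url)
    # .hostname strips credentials and port and lowercases the host.
    host = parts.hostname or ""
    return parts.scheme == "https" and host in ALLOWED_HOSTS


if __name__ == "__main__":
    for url in [
        "https://mail.example.com/inbox",
        "http://mail.example.com/inbox",              # not HTTPS
        "https://mail.example.com.attacker.test/",    # lookalike host
    ]:
        print(url, egress_permitted(url))
```

Matching the exact host, rather than checking whether a URL merely contains an allowed name, blocks lookalike domains that embed a trusted host as a prefix.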

The broader takeaway: as AI shifts from answering questions to taking action, security and governance may become the limiting factor in how fast agents scale.

The industry race to build AI agents

OpenAI’s move positions it squarely in the emerging agent race.

In enterprise contexts, agents could become embedded in CRM systems, ticketing platforms, procurement workflows and analytics dashboards.

In consumer contexts, agents may handle travel planning, subscription management, inbox filtering and financial tracking.

If agents mature technically, they could evolve into a new computing abstraction layer, sitting between users and software applications.

That possibility introduces regulatory questions:

- Who is liable for agent mistakes?

- How are permissions audited?

- How is user consent managed?

- What constitutes unauthorized action?

As models gain operational agency, regulatory scrutiny is likely to increase.

What comes next

The bigger shift isn’t from chat to agents; it’s from interface to infrastructure.

Chatbots improved how we interact with software. Agents will sit between us and software. That’s a far more consequential change.

While early use cases focused on tasks like learning how to build a website with ChatGPT, the next phase of AI goes deeper, shifting from assistive tools to systems that execute actions across software environments.

Whoever wins this phase won’t just have the smartest model. They’ll have the safest, most reliable execution layer with strong permission controls, auditability and governance built in. OpenClaw’s rise shows demand for delegation is real. Its security gaps show the infrastructure isn’t ready yet.

The next AI leader won’t be defined by how well it talks. It will be defined by how safely it acts.
