Key highlights

- Understand what makes dedicated hosting different and why it still matters for performance, control and long-term scalability.

- Learn how to identify and resolve common dedicated hosting problems, including hardware failure, software bugs and DDoS attacks.

- Explore a step-by-step troubleshooting process to resolve server issues using diagnostics, logs and resource monitoring tools.

- Discover the signs that indicate it’s time to seek professional support and how Bluehost helps keep your server running smoothly.

Frustrated by unexpected server crashes, email issues or sluggish site performance, even on your dedicated hosting plan? You’re not alone. With full control comes full responsibility and small missteps can lead to big problems: from downtime that hurts your brand to security gaps that expose your data.

Unlike shared hosting, where most of the backend is managed for you, dedicated servers put everything in your hands: software updates, firewalls, backups, resource monitoring and recovery plans. One overlooked patch or misconfigured setting can trigger a domino effect that takes hours (or days) to resolve, resulting in serious dedicated hosting problems.

To run a stable environment, you need to detect issues early, fix them fast and know when to escalate to expert support.

This blog breaks down the most common dedicated hosting problems and server maintenance issues, from network threats to configuration errors. It also shows you how to troubleshoot and prevent them before they impact your users.

TL;DR: Dedicated hosting problems & fixes

| Category | Details |
| --- | --- |
| Most common issues | Overloading, hardware failure, outdated patches, DDoS attacks, backup failures |
| Quick fixes | Monitor resources, automate updates, use a WAF, test backups |
| When your server is down | Contact your host, notify clients, lock down security, restore from backups |
| Bluehost edge | 99.9% uptime guarantee, DDoS protection, 24/7 support, automated backups |

What makes dedicated hosting different?

Dedicated hosting provides your website with full access to server resources. Unlike shared or VPS hosting, where multiple users share bandwidth and memory, a dedicated server machine ensures all processing power and disk space are reserved for your site alone.

This setup is ideal for running complex server applications such as a terminal server or DNS server. It can also run background services, such as a print spooler when a printing device is attached, that would be resource-intensive in a shared environment.

With dedicated hosting, you can choose your operating system, whether that’s Windows Server or Linux. This flexibility gives you more control over performance tuning and system management.

Finally, dedicated hosting makes it easier to troubleshoot software issues quickly. Having full access to the server means you can apply fixes, optimize settings and ensure maximum stability.

However, managing a server goes beyond control. Without proper upkeep, issues like misconfigurations, security vulnerabilities and performance slowdowns can arise quickly.

These are just a few examples of dedicated hosting problems that often stem from overlooked updates, weak monitoring or poor configuration.

Without proper server monitoring, early warning signs, such as hardware degradation, outdated software or resource bottlenecks, can easily be missed.

Despite the complexity, dedicated hosting remains essential for high-traffic websites, eCommerce business operations and mission-critical applications that demand speed, stability and customization.

How do different hosting types compare?

Different hosting types offer varying levels of performance, control, scalability and cost. Let’s break down how dedicated hosting compares to shared and VPS options across key performance and management factors.

| Feature | Shared hosting | VPS hosting | Dedicated hosting |
| --- | --- | --- | --- |
| Resources | Shared with other users | Shared (virtualized) | Fully dedicated to one user |
| Performance | Slower during traffic spikes | Moderate, varies by plan | High, stable even during peaks |
| Security | Higher risk of malware | Better isolation | Full control, fewer security issues |
| Server management | Minimal, managed by host | Some control | Full manual management and server monitoring |
| Customization | Limited | Some customization | Full root access and flexibility |
| Best for | Blogs, portfolios | Growing businesses | Large-scale, resource-heavy sites |

This comparison highlights why dedicated hosting is ideal for businesses that require power, flexibility and reliability without compromise.

While the advantages are clear, managing a dedicated server also presents challenges, especially if it’s not properly maintained or configured. Let’s explore the most common problems and how to fix them.

What are the most common dedicated hosting problems and how to fix them?

Even the most advanced dedicated server won’t remain stable without regular upkeep. Ignoring basic tasks often leads to server maintenance problems that affect uptime, performance and data security, especially in high-demand environments.

Use this quick-reference table to identify dedicated hosting problems, understand what causes them and apply proven solutions with confidence:

| Issue | Description | Fix |
| --- | --- | --- |
| Server overloading | Too many tasks or users slowing the system | Load balancing, optimize cron jobs |
| Hardware failure | Aging drives, memory or cooling parts | Use RAID, monitor and replace hardware |
| Outdated software | Unpatched bugs or vulnerabilities | Automate updates, use staging environments |
| DDoS attacks & vulnerabilities | A flood of malicious traffic or security loopholes | Install WAF, apply rate limits, harden security |
| Misconfigured firewalls | Blocks key services or leaves gaps | Audit rules, apply least privilege, close unused ports |
| Backup failures | Missing or corrupt backups | Automate and test backups regularly, store off-site |
| Poor monitoring | Misses early warnings or issues | Use alerts, log analyzers, dashboards |
| Network issues | DNS errors, packet loss or bad routing | Use traceroute, DNS testing, fallback routes |

These dedicated hosting problems can disrupt even the most resilient hosting setup, so knowing how to handle each one keeps your site safe.

1. Server overloading

When your server handles more requests than it was designed for, it slows down or crashes. This is a frequent trigger of server maintenance problems, often caused by unoptimized cron jobs or inefficient code.

Fix: Distribute workloads using load balancing or mirrored servers. Adjust the cron job frequency and remove redundant background processes to ease resource usage.
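If overlapping cron runs are what's piling up, one common pattern is to have each run take a non-blocking file lock and skip itself when the previous run still holds it. The sketch below assumes a Linux or other Unix server (it uses `fcntl.flock`); `run_exclusive` and the lock path are hypothetical names for illustration, not part of any hosting tool.

```python
import fcntl
import os


def run_exclusive(lock_path, job):
    """Run job() only if no other run holds the lock; return True if it ran."""
    # The lock file's contents don't matter; it only serves as a shared handle.
    fd = os.open(lock_path, os.O_CREAT | os.O_RDWR, 0o644)
    try:
        try:
            # LOCK_NB makes flock fail immediately instead of waiting.
            fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
        except BlockingIOError:
            # A previous run is still active: skip rather than stack another one.
            return False
        try:
            job()
            return True
        finally:
            fcntl.flock(fd, fcntl.LOCK_UN)
    finally:
        os.close(fd)
```

You would call it from the script your cron entry runs, for example `run_exclusive("/tmp/nightly-report.lock", generate_report)`, where `generate_report` stands in for your own job. On many Linux systems, the `flock` command-line utility from util-linux gives the same behavior directly in the crontab.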

2. Hardware failure

Physical components, such as hard drives, CPUs or memory, can wear out over time. Without early detection, this can lead to data loss, outages or total system failure.

Fix: Implement RAID storage to mirror critical data and reduce risk. Monitor hardware health regularly and replace aging parts before they fail.

3. Software bugs or outdated patches

Unpatched software can introduce instability and open security holes. Outdated software is among the most common dedicated hosting problems, especially when updates are ignored.

Fix: Set up automated updates and patch management. Always test new software versions in a staging environment before applying them to your live server.
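On Debian or Ubuntu servers, `apt list --upgradable` shows packages with pending updates, and those coming from a `-security` suite patch known vulnerabilities. The sketch below parses that output to separate security updates from the rest, assuming the line format shown in the comment. apt itself warns that its command-line output is not a stable interface, so a tool such as `unattended-upgrades` is a sturdier way to automate the patching itself; this is only a reporting aid.

```python
import re

# One line of `apt list --upgradable`, e.g.
# "openssl/jammy-updates,jammy-security 3.0.2-0ubuntu1.15 amd64 [upgradable from: 3.0.2-0ubuntu1.14]"
LINE_RE = re.compile(
    r"^(?P<name>[^/\s]+)/(?P<suites>\S+)\s+(?P<new>\S+)\s+(?P<arch>\S+)"
    r"\s+\[upgradable from: (?P<old>[^\]]+)\]"
)


def pending_updates(apt_output):
    """Split pending upgrades into (security, other) lists of (name, old, new)."""
    security, other = [], []
    for line in apt_output.splitlines():
        match = LINE_RE.match(line.strip())
        if not match:
            continue  # skips the "Listing..." header and blank lines
        entry = (match["name"], match["old"], match["new"])
        # Packages published to a "-security" suite fix known vulnerabilities.
        if any(s.endswith("-security") for s in match["suites"].split(",")):
            security.append(entry)
        else:
            other.append(entry)
    return security, other
```

You could capture the output with `subprocess.run(["apt", "list", "--upgradable"], capture_output=True, text=True).stdout` and alert whenever the security list is non-empty.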

4. DDoS attacks and security vulnerabilities

DDoS attacks flood your server with fake traffic, straining resources and causing downtime. Choosing DDoS-protected dedicated server hosting helps minimize these risks and keep your site accessible to users during an attack.

Fix: Use a Web Application Firewall (WAF), apply rate limiting and harden server settings to filter traffic and block known vulnerabilities.
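Rate limiting is usually configured in your web server, WAF or CDN rather than written by hand, but a common technique behind it, the token bucket, is easy to see in a short sketch. Each client IP gets a bucket holding up to `capacity` tokens that refills at `rate` tokens per second, and each request spends one token or is rejected. The class names and limits are illustrative assumptions, and application-level limiting like this cannot absorb a large volumetric DDoS, which has to be filtered upstream.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientLimiter:
    """One bucket per client IP, so a single noisy source can't starve everyone else."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets = {}

    def allow(self, client_ip):
        bucket = self.buckets.get(client_ip)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, self.clock)
            self.buckets[client_ip] = bucket
        return bucket.allow()
```

The `clock` parameter lets you test the logic without waiting. A production version would also need to evict idle buckets so memory doesn't grow with every new IP address.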

5. Misconfigured firewalls

Firewalls that are too strict or poorly configured may block critical services or leave ports vulnerable to attack. This leads to access issues or weak points in your server’s defenses.

Fix: Regularly audit firewall rules and apply the principle of least privilege. Close any unused ports and review inbound and outbound rules for accuracy.
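Part of a firewall audit is confirming which ports actually accept connections. A minimal sketch that uses plain TCP connects from Python's `socket` module and compares the result against an allowlist you define. The function names are hypothetical, and you should only scan servers you own or manage. Running it from a machine outside your network shows what the firewall exposes to the internet, while running it on the server itself also sees services bound to localhost.

```python
import socket


def open_ports(host, ports, timeout=0.5):
    """Return the subset of `ports` that accept a TCP connection on `host`."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising on refusal.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found


def unexpected_ports(host, ports, allowed):
    """Open ports that aren't on the allowlist: candidates to close or firewall."""
    return [p for p in open_ports(host, ports) if p not in allowed]
```

For example, `unexpected_ports("203.0.113.10", [21, 22, 25, 80, 443, 3306], allowed={22, 80, 443})` would list any of those ports that are open but not expected (the IP address is a documentation placeholder).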

6. Backup failures

Backups are essential, but if they’re outdated, incomplete or corrupted, you’ll have no recovery path in the event of a crash. Backup failures often go unnoticed until it’s too late.

That’s why we at Bluehost include automated backups with CodeGuard, so your WordPress site is always protected with daily snapshots and one-click restores.

Fix: Schedule automated, off-site backups and verify their integrity through regular testing. Keep multiple restore points to cover different failure scenarios.
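Testing a backup means more than checking that the file exists. The sketch below records a SHA-256 checksum when the archive is created, then later confirms the checksum still matches and does a trial restore into a scratch directory to check that key files are present. It assumes a simple `.tar.gz` of a site directory; the function names and the `required_files` list are illustrative and don't describe how CodeGuard or any other backup product works.

```python
import hashlib
import tarfile
import tempfile
from pathlib import Path


def sha256_of(path):
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read in 1 MiB chunks so large archives don't need to fit in memory.
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def create_backup(source_dir, archive_path):
    """Write a .tar.gz of source_dir and return its checksum (store it with the archive)."""
    with tarfile.open(archive_path, "w:gz") as tar:
        tar.add(source_dir, arcname=".")
    return sha256_of(archive_path)


def verify_backup(archive_path, expected_sha256, required_files):
    """Check the checksum, then trial-restore into a scratch dir and look for key files."""
    if sha256_of(archive_path) != expected_sha256:
        return False
    with tempfile.TemporaryDirectory() as scratch:
        with tarfile.open(archive_path, "r:gz") as tar:
            # Use the safer "data" extraction filter where this Python version has it.
            if hasattr(tarfile, "data_filter"):
                tar.extractall(scratch, filter="data")
            else:
                tar.extractall(scratch)
        return all((Path(scratch) / name).is_file() for name in required_files)
```

Keeping the checksum next to the off-site copy lets you verify the archive wherever it's stored, not just on the server that made it.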

7. Poor monitoring

Without real-time monitoring, you risk missing resource bottlenecks, unauthorized access or early signs of hardware failure. This delays response time and increases damage.

Fix: Enable alerts and integrate server logs with centralized monitoring tools to enhance visibility and control. Track CPU, RAM, disk usage and login activity to identify issues early.
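Alerting starts with a threshold check you can run on a schedule. This sketch, assuming a Linux or other Unix server, compares disk usage and the 1-minute load average per CPU against limits. The default limits are hypothetical starting points, and sending the alerts by email or chat is left to your existing tooling.

```python
import os
import shutil


def check_thresholds(path="/", disk_limit=0.90, load_per_cpu_limit=1.5):
    """Return human-readable alerts for disk usage and CPU load above the limits."""
    alerts = []
    usage = shutil.disk_usage(path)
    disk_ratio = usage.used / usage.total
    if disk_ratio > disk_limit:
        alerts.append(f"disk {path} at {disk_ratio:.0%} (limit {disk_limit:.0%})")
    # The 1-minute load average divided by the CPU count approximates saturation.
    load_1m, _, _ = os.getloadavg()
    per_cpu = load_1m / (os.cpu_count() or 1)
    if per_cpu > load_per_cpu_limit:
        alerts.append(f"load {per_cpu:.2f} per CPU (limit {load_per_cpu_limit})")
    return alerts
```

On Linux, the load average also counts processes waiting on disk I/O, so a high value can point to storage bottlenecks as well as CPU saturation.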

8. Network issues

DNS errors, slow routing or packet loss can make your site unreachable, even if your server is working fine. These problems are often caused by misconfigurations or issues at the ISP level.

Fix: Run DNS tests, traceroute and latency checks to identify the root cause of the issue. Set up fallback DNS or alternate routing paths to improve reliability.
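Two quick checks help separate DNS problems from routing problems: does the hostname resolve to the addresses you expect, and how long does a TCP handshake to the server take? A minimal sketch using Python's standard `socket` module, with illustrative function names. It complements rather than replaces `dig`, `traceroute` and your provider's diagnostics, and it reflects the view from wherever you run it.

```python
import socket
import time


def resolve(hostname):
    """Return the sorted, de-duplicated IP addresses the local resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def tcp_latency_ms(host, port, timeout=3.0):
    """Time a TCP handshake to host:port in milliseconds; raises OSError on failure."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000
```

For example, compare `resolve("example.com")` against the IPs in your DNS zone, then call `tcp_latency_ms("example.com", 443)` a few times to spot slow or failing connections.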

Also read: Common DNS Issues and How to Solve Them

When these dedicated hosting problems occur, your response determines whether downtime is brief or business-impacting. First, let’s look at how these problems can affect your business; then we’ll walk through the immediate steps to recover from a server outage.

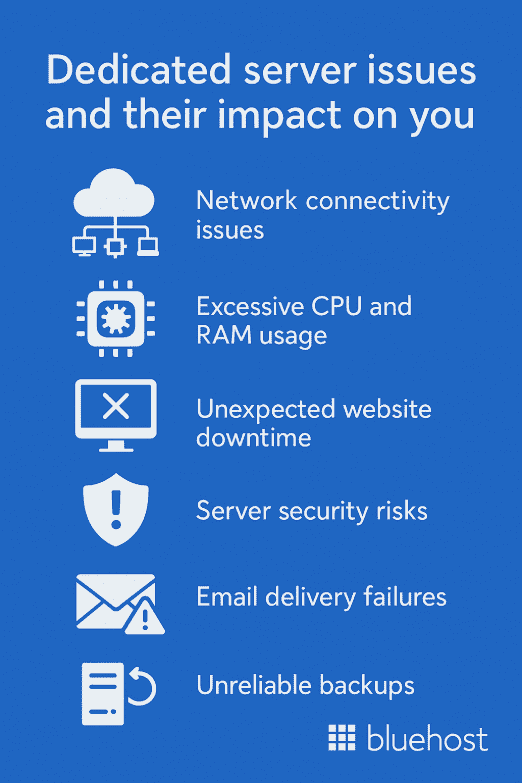

How can these dedicated server problems affect you?

Dedicated server problems can hurt your business by slowing websites, causing email failures, lowering SEO rankings and reducing customer trust.

Knowing how to solve server problems quickly through proper troubleshooting is critical. If you’re not sure what to do when your server is down, even a few minutes of downtime can cost sales and damage your reputation. Here’s how these issues can affect you:

1. Network connectivity issues

Slow or unstable connections are some of the most common server problems. For example, an eCommerce store losing connectivity during checkout can frustrate buyers and cause abandoned carts.

These server issues often happen due to faulty routers, misconfigured DNS or bandwidth limits. Troubleshooting tools like Ping and Traceroute help you pinpoint network bottlenecks quickly on a dedicated server.

2. Excessive CPU and RAM usage

Overloaded resources are a classic dedicated hosting problem. When CPU or RAM usage spikes, websites crash or load painfully slowly. Think of a streaming site buffering endlessly during peak hours: that’s a server issue caused by poor resource management.

To handle these spikes, optimize your applications, upgrade hardware or automate resource monitoring.

3. Unexpected website downtime

Downtime is one of the most damaging server issues. For example, if a SaaS platform goes offline during signups, it loses revenue instantly. Knowing what to do if your server is down, such as switching to backups or contacting your provider, is crucial.

These dedicated hosting problems are often tied to hardware failure, DDoS attacks or misconfigurations.

4. Server security risks

Security-related issues are among the most common server problems today. A server vulnerable to brute-force attacks or malware can expose data to theft. For instance, a compromised WordPress site can redirect users to malicious pages.

You can reduce these vulnerabilities by using firewalls, keeping systems patched and enabling strong authentication. Regular troubleshooting, backed by continuous monitoring, helps maintain stability.

5. Email delivery failures

Missed or delayed emails can damage credibility. For example, if invoices fail to reach remote clients, it creates confusion and delays payments. One common misconfiguration is an open relay: a mail server that accepts and forwards messages from unauthorized sources. This increases the risk of your IP being blacklisted and causes legitimate business emails to bounce or get flagged as spam.

Email failures like these are often caused by IP blacklisting or port misconfigurations. To fix them, verify DNS records such as MX and SPF, adjust spam filters and rely on monitoring tools to catch delivery problems early.
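To check whether your mail server's IP has been blacklisted, DNS-based blocklists (DNSBLs) are queried by reversing the IPv4 octets and looking the result up under the list's zone: an answer means listed, and a nonexistent name means not listed. A minimal sketch, where `dnsbl.example.org` is a placeholder for whichever blocklist you use. Some lists restrict or flag queries sent through large public resolvers, and a resolver failure also ends up as "not listed" here, so treat the result as a hint rather than a verdict.

```python
import ipaddress
import socket


def dnsbl_query_name(ip, zone):
    """Build the DNSBL lookup name: the reversed IPv4 octets prepended to the zone."""
    octets = str(ipaddress.IPv4Address(ip)).split(".")  # raises ValueError if invalid
    return ".".join(reversed(octets)) + "." + zone


def is_listed(ip, zone):
    """True if the blocklist zone returns an address record for ip."""
    try:
        socket.gethostbyname(dnsbl_query_name(ip, zone))
        return True
    except (socket.gaierror, socket.herror):
        # No such name (or a failed lookup): treated as not listed.
        return False
```

For example, `is_listed("203.0.113.25", "dnsbl.example.org")` looks up `25.113.0.203.dnsbl.example.org`.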

6. Unreliable backups

One of the worst server issues is unreliable backups. If backups fail and the server crashes, businesses risk permanent data loss. For example, a digital agency losing client files due to failed backups is a costly mistake.

To reduce this risk, automate your backups and test restores regularly, so you’re prepared if the server goes down.

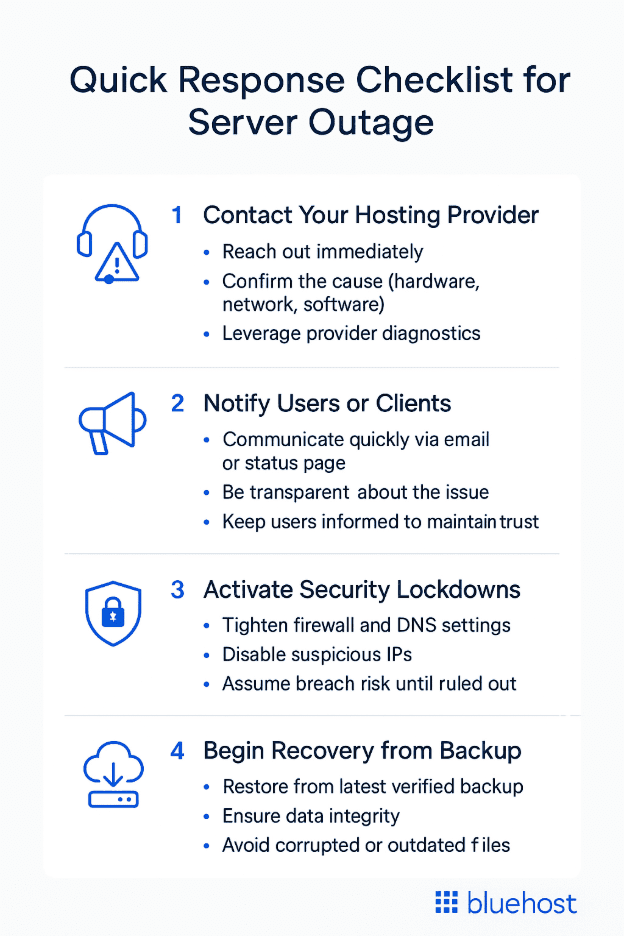

What should you do immediately if your dedicated server goes down?

A dedicated server crash can result in downtime, data loss or security threats. Whether caused by hardware failure, a software bug or an external attack, your response needs to be immediate and focused.

A solid backup strategy is a vital part of solving server problems effectively, since it helps you restore operations faster.

Follow these steps to regain control and initiate a quick recovery, especially when facing urgent dedicated hosting problems that demand swift action.

Step 1: Contact your hosting provider

Notify your hosting provider as soon as you become aware of the outage. Their team may already be tracking alerts or log activity that can help diagnose the cause. This is often the first step toward a fix, since the provider can confirm whether the issue is specific to your server or widespread.

Early communication speeds up resolution and clarifies whether the issue is isolated, network-related or tied to broader infrastructure problems.

Step 2: Notify users or clients

If the outage affects customer-facing services, communicate the information clearly and promptly. Use email, social media or a status page to explain the disruption and reassure users that recovery is underway.

Timely updates maintain trust and reduce confusion during unplanned downtime.

Step 3: Activate security lockdowns

Until the root cause is known, treat the situation as a potential security risk. Tighten firewall rules, adjust DNS settings if needed and block any suspicious IP addresses.

Taking these precautions early helps prevent unauthorized access, malware injection or further system damage.

Step 4: Begin recovery from backup

Start restoring services using your most recent, verified backup. Ensure the backup file is complete and reflects a stable version of your system.

If your backups are current and stored off-site, recovery should be efficient. If not, you risk prolonged downtime and permanent data loss.

A quick response limits the damage, but recovery isn’t complete without knowing what went wrong. To ensure long-term stability, the next step is to troubleshoot the issue and resolve its root cause using a clear and methodical approach.

Note: Bluehost customers benefit from 24/7 support that can step in immediately if downtime occurs.

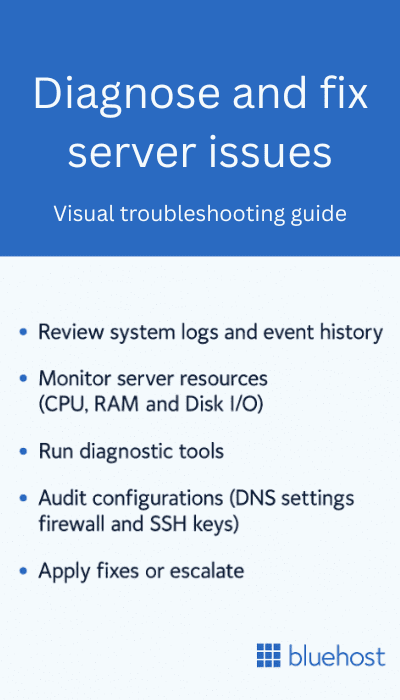

How can you effectively troubleshoot dedicated server issues?

Troubleshooting dedicated servers requires a methodical, hands-on approach to detect, diagnose and resolve critical performance issues. The goal is to identify root causes before performance drops, data is lost or users are affected.

Follow these steps to diagnose and resolve common server issues with precision.

1. Review system logs and event history

Server logs are the first place to look when an issue arises. They provide critical insight into system behavior, failed services, blocked access or unexpected shutdowns.

Scan error, firewall and application logs for time-stamped events that signal unusual activity. Look for repeated failures, unauthorized attempts or patterns linked to recent changes.
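As an example of spotting repeated failures, the sketch below counts failed SSH password attempts per source IP. It assumes OpenSSH's usual `Failed password for ... from <ip> port <n>` message, which usually lands in `/var/log/auth.log` on Debian/Ubuntu or `/var/log/secure` on RHEL-based systems; the threshold and function name are arbitrary examples.

```python
import re
from collections import Counter

# Matches e.g. "sshd[2211]: Failed password for invalid user admin from 198.51.100.7 port 40022 ssh2"
FAILED_RE = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+")


def failed_logins_by_ip(lines, threshold=5):
    """Count failed SSH password attempts per source IP; return those at or above threshold."""
    counts = Counter()
    for line in lines:
        match = FAILED_RE.search(line)
        if match:
            counts[match.group(2)] += 1
    return {ip: n for ip, n in counts.most_common() if n >= threshold}
```

Passing an open log file works too, since the function only iterates over lines; the returned IPs are candidates to review or block.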

2. Monitor server resources (CPU, RAM and Disk I/O)

Performance slowdowns often stem from resource exhaustion. When your server runs out of memory or hits disk bottlenecks, it may slow down or crash entirely.

Use terminal tools or your dashboard to monitor real-time resource usage. Identify any processes that cause CPU spikes, memory leaks or high I/O delays, which impact speed and stability.
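Terminal tools like `top`, `free` and `vmstat` show this interactively; for a scripted check on Linux, memory pressure can be read from `/proc/meminfo`. `MemAvailable` (present on Linux 3.14 and later) estimates how much memory can be used without swapping, which is a better signal than `MemFree`. A minimal sketch with an illustrative function name:

```python
def memory_usage(meminfo_text):
    """Return (used_fraction, available_kib) from the text of /proc/meminfo."""
    fields = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        if rest.strip():
            # Values look like "MemTotal:  16318480 kB"; keep the number only.
            fields[key.strip()] = int(rest.split()[0])
    total = fields["MemTotal"]
    available = fields["MemAvailable"]
    return (total - available) / total, available
```

On a live server, pass it the contents of `/proc/meminfo` and alert when the used fraction stays high across several samples.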

3. Run diagnostic tools

Command-line tools help you identify network problems such as blocked connections, latency, connection failures or protocol errors.

- Ping: Test network response time and packet loss

- Traceroute: Map the network path to detect latency or routing failures

- Netstat: View open ports and ongoing connections

- Curl: Test HTTP or API responses from a specific server or domain

Use these to pinpoint dropped connections, domain resolution errors or slow data transfer rates.
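For a curl-style check you can log over time, a script can record both the HTTP status and how long the response took. A minimal sketch with Python's standard `urllib`; the function name and timeout are illustrative. Error statuses (4xx/5xx) are returned as codes, while connection failures such as refused connections or DNS errors are left to raise an exception so they aren't mistaken for a slow response.

```python
import time
import urllib.error
import urllib.request


def http_check(url, timeout=5.0):
    """Return (status_code, elapsed_ms) for a GET request to url."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
            response.read()  # include the body transfer in the timing
    except urllib.error.HTTPError as err:
        status = err.code  # the server answered, just with an error status
    elapsed_ms = (time.perf_counter() - start) * 1000
    return status, elapsed_ms
```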

Pro tip: In addition to these command-line tools, you can also rely on real-world monitoring platforms like UptimeRobot for uptime alerts, GTmetrix for page speed analysis and Google PageSpeed Insights for performance scoring and optimization recommendations. These tools help you validate server health and user experience beyond just the backend checks.

4. Audit configurations (DNS settings, firewall and SSH keys)

Misconfigured DNS records, firewall restrictions or outdated access credentials can cause service interruptions or expose your server to threats.

Review DNS zone files for typos or misrouted records. Check firewall access control lists for accidental blocks and update or rotate SSH keys to maintain secure access.
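One concrete part of an SSH key audit is spotting weak keys in `authorized_keys`. The sketch below flags DSA keys, which OpenSSH has disabled by default since version 7.0, and RSA keys whose modulus is below a minimum size, by decoding the public key blob. The 2048-bit minimum and the function names are assumptions for illustration, and the simple whitespace split does not handle quoted options that contain spaces.

```python
import base64
import binascii
import struct

# Key types this sketch recognizes; extend as needed.
KNOWN_TYPES = {
    "ssh-rsa", "ssh-dss", "ssh-ed25519",
    "ecdsa-sha2-nistp256", "ecdsa-sha2-nistp384", "ecdsa-sha2-nistp521",
}


def _read_field(blob, offset):
    """Read one length-prefixed field: a big-endian uint32 length, then that many bytes."""
    (length,) = struct.unpack_from(">I", blob, offset)
    start = offset + 4
    return blob[start:start + length], start + length


def rsa_key_bits(b64_blob):
    """Bit length of the modulus in an ssh-rsa public key blob (type, e, n)."""
    blob = base64.b64decode(b64_blob)
    _key_type, offset = _read_field(blob, 0)
    _exponent, offset = _read_field(blob, offset)
    modulus, _ = _read_field(blob, offset)
    return int.from_bytes(modulus, "big").bit_length()


def audit_authorized_keys(lines, min_rsa_bits=2048):
    """Return (line_number, problem) pairs for weak or unreadable key lines."""
    findings = []
    for lineno, raw in enumerate(lines, start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        # Options such as from="..." may precede the key type, so search for it.
        idx = next((i for i, f in enumerate(fields) if f in KNOWN_TYPES), None)
        if idx is None or idx + 1 >= len(fields):
            findings.append((lineno, "unrecognized key line"))
        elif fields[idx] == "ssh-dss":
            findings.append((lineno, "deprecated DSA key"))
        elif fields[idx] == "ssh-rsa":
            try:
                bits = rsa_key_bits(fields[idx + 1])
            except (binascii.Error, struct.error):
                findings.append((lineno, "malformed ssh-rsa key"))
                continue
            if bits < min_rsa_bits:
                findings.append((lineno, f"RSA key is only {bits} bits"))
    return findings
```

Any flagged line is a candidate for rotation to a newer key type such as ed25519.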

Also read: How to Manage DNS Records for Your Domain

5. Apply fixes or escalate

If the issue is clear, implement a fix right away: restart affected services, update configurations or restore from the latest backup. If the problem remains unclear or unresolved, escalate to professional support.

Delaying escalation can increase downtime and the risk of security breaches or permanent data loss. Acting early ensures faster resolution and limits impact on your users.

Effective troubleshooting protects your infrastructure, minimizes disruptions and keeps your server operating at peak performance. A systematic approach not only solves problems, it helps prevent them from recurring.

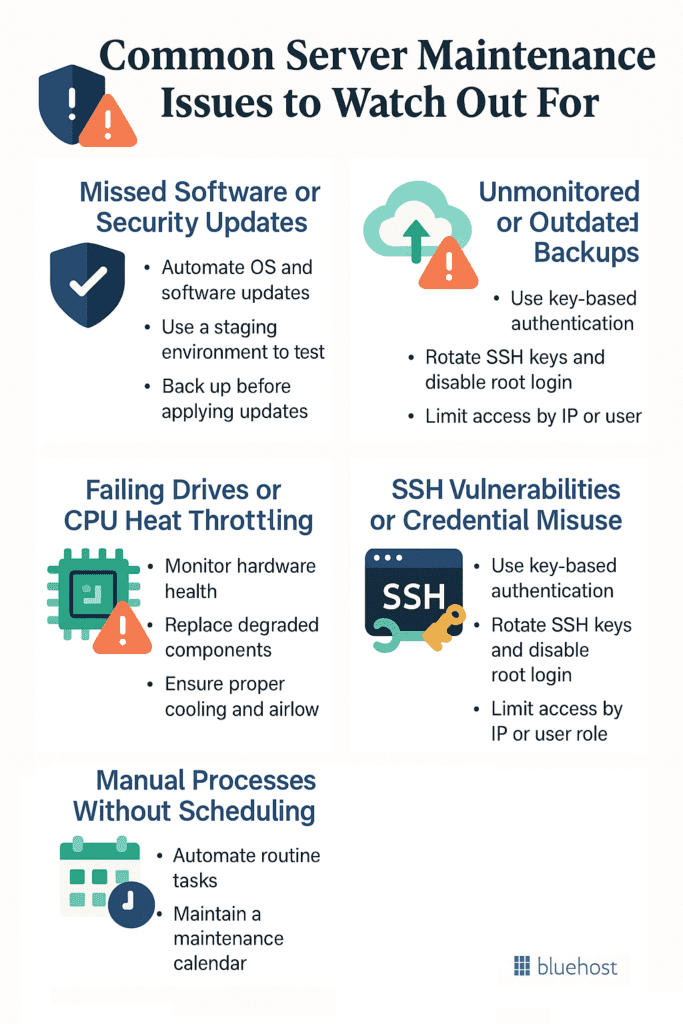

What server maintenance issues can lead to common server problems?

Even the most advanced dedicated server won’t remain stable without regular upkeep. Neglecting routine maintenance tasks can lead to performance degradation, data loss and in some cases, full system failure.

Use this checklist to identify and resolve server maintenance issues before they impact your infrastructure.

1. Missed software or security updates

Running outdated software increases your risk of security vulnerabilities, malware infections and performance issues. It also creates compatibility problems with modern applications and services.

- Automate updates for your operating system, control panel and installed server software.

- Test updates in a staging environment before pushing changes live.

- Always create a fresh backup before applying major updates or patches.

2. Failing drives or CPU heat throttling

Aging hardware components, especially drives and CPUs, can silently degrade performance. Overheating or hardware wear can lead to sudden slowdowns, corrupted files or complete outages.

- Monitor hardware health using your hosting dashboard or command-line monitoring tools.

- Replace worn or degraded components before failure occurs.

- Ensure your server’s cooling system is properly maintained and airflow remains unobstructed.
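Where the kernel exposes thermal sensors, temperatures can be read from `/sys/class/thermal/thermal_zone*/temp` in millidegrees Celsius. Many rack servers report temperatures through IPMI or `lm-sensors` instead, and drive health is usually checked with `smartctl` from smartmontools, so treat this as a minimal sketch that assumes the sysfs interface is present. The 80 °C limit and the function name are arbitrary examples.

```python
from pathlib import Path


def hot_zones(limit_c=80.0, base="/sys/class/thermal"):
    """Return (zone, type, celsius) for thermal zones at or above limit_c."""
    hot = []
    for zone in sorted(Path(base).glob("thermal_zone*")):
        try:
            # The kernel reports temperatures in millidegrees Celsius.
            celsius = int((zone / "temp").read_text().strip()) / 1000
            kind = (zone / "type").read_text().strip()
        except (OSError, ValueError):
            continue  # some zones can be unreadable or report errors
        if celsius >= limit_c:
            hot.append((zone.name, kind, celsius))
    return hot
```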

3. Unmonitored or outdated backups

Backups are only useful if they’re current, tested and stored securely. In an emergency, missing or corrupted backups can turn a minor issue into a full-blown crisis.

- Automate scheduled backups to a reliable off-site or cloud-based location.

- Test your restore process regularly to ensure data can be recovered without error.

- Keep multiple restore points and verify that backups are running as expected.
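Verifying that backups are running can be as simple as checking the age of the newest file in the backup location. This sketch assumes backups land as `*.tar.gz` files in one directory on a daily schedule, so it alerts after 26 hours (a day plus some slack); the pattern, limit and function name are illustrative.

```python
import time
from pathlib import Path


def stale_backup_alert(backup_dir, pattern="*.tar.gz", max_age_hours=26, now=None):
    """Return an alert string if the newest matching backup is missing or too old, else None."""
    now = time.time() if now is None else now
    backups = list(Path(backup_dir).glob(pattern))
    if not backups:
        return f"no backups matching {pattern} in {backup_dir}"
    newest = max(backups, key=lambda p: p.stat().st_mtime)
    age_hours = (now - newest.stat().st_mtime) / 3600
    if age_hours > max_age_hours:
        return f"newest backup {newest.name} is {age_hours:.1f} h old"
    return None
```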

4. SSH vulnerabilities or credential misuse

Weak SSH access policies or compromised credentials put your entire server at risk. Unauthorized access can lead to data breaches, malware injection or complete loss of control.

- Use strong, key-based authentication instead of passwords whenever possible.

- Regularly rotate SSH keys and disable root login for added protection.

- Restrict access to trusted IP addresses or user roles with minimum permissions.
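To check the root-login and password settings, a script can read `sshd_config` and apply OpenSSH's rule that the first value given for a keyword wins. A minimal sketch under simplifying assumptions: it stops at the first `Match` block, doesn't follow `Include` files and expects `Keyword value` lines, and the defaults it falls back on (`prohibit-password` for root login, password authentication enabled) match recent OpenSSH releases. Running it on the output of `sshd -T`, which prints the effective configuration, avoids most of those gaps.

```python
def audit_sshd_config(text):
    """Flag risky SSH settings; the first occurrence of each keyword wins."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue
        key, value = parts[0].lower(), parts[1].strip().lower()
        if key == "match":
            break  # later settings apply only conditionally; stop here
        settings.setdefault(key, value)

    findings = []
    root = settings.get("permitrootlogin", "prohibit-password")  # recent OpenSSH default
    if root != "no":
        findings.append(f"PermitRootLogin is {root}; set it to no")
    if settings.get("passwordauthentication", "yes") != "no":  # default is yes
        findings.append("PasswordAuthentication is enabled; prefer key-based login")
    return findings
```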

Also read: What is SSH Access and How to Enable It in Your Hosting Account

5. Manual processes without scheduling or fallbacks

Relying on manual steps for tasks like syncing files or applying updates creates inconsistency and risk. If the person responsible is unavailable, essential processes may be delayed or missed entirely.

- Automate repetitive admin tasks using cron jobs or scheduled scripts.

- Build a clear maintenance calendar that includes frequency and responsibility.

- Document all procedures and create fallback plans to ensure continuity during staff absences.

Also read: Cron Job Basics

Neglecting maintenance can compromise performance and security, which is why a proactive, managed approach makes all the difference. Let’s examine how Bluehost helps you prevent these issues before they affect your site.

How do we at Bluehost prevent these hosting issues?

At Bluehost, we understand the importance of uptime, performance and security to your business. That’s why our platform is built to help you avoid dedicated hosting problems before they start.

From proactive monitoring to built-in protection, here’s how we help you stay secure, stable and in control.

1. 99.9% uptime guarantee

Consistent uptime is essential to your site’s reliability and user experience. Our infrastructure is designed to deliver maximum stability, even under demanding workloads or traffic surges. With advanced hardware and optimized configurations, we ensure your site stays available when it matters most.

- All dedicated hosting plans are backed by a 99.9% uptime guarantee, so your site stays online and accessible with minimal interruptions.

- NVMe storage delivers fast read/write speeds, minimizing delays during high activity.

- Unmetered bandwidth allows your site to manage large volumes of traffic without performance degradation.

Also read: Uptime Monitoring

2. Real-time monitoring and incident response

Detecting and responding to issues early is key to avoiding downtime. Our 24/7 monitoring tools continuously watch over your server, allowing for immediate action to be taken, often before you’re even aware of a problem.

- Real-time resource monitoring tracks CPU, memory and disk usage to identify overloads early.

- Automated alerts notify our support team of unusual patterns or critical failures.

- Fast provisioning ensures your server is deployed quickly and updated promptly for immediate readiness.

Also read: Dedicated Server Downtime: 6 Causes & 8 Fixes

3. DDoS mitigation, WAF and secure configurations

Security is a top priority in dedicated hosting. We use multiple layers of defense to block threats, protect your data and reduce the chance of service disruption caused by external attacks or system breaches.

- DDoS protection helps keep your server online by absorbing or blocking malicious traffic.

- Our Web Application Firewall (WAF) benefits your site by actively filtering out suspicious or harmful requests in real time, before they reach your server.

- RAID storage protects data by distributing it across multiple drives for redundancy and durability.

- Every dedicated plan includes a free SSL certificate to encrypt site traffic and secure sensitive transactions.

Also read: Is My Website Protected Against DDoS Attacks?

4. 24/7 support by hosting experts

Even experienced developers need support. That’s why our team is available around the clock to help with technical issues, performance concerns or custom configurations. You get fast, reliable help when and where you need it.

- Our support team includes Linux-certified professionals trained in server optimization, security and diagnostics.

- A free site migration tool makes it easy to move your site to Bluehost without downtime or added risk.

- A 30-day money-back guarantee gives you the flexibility to evaluate our services with confidence.

Also read: Migration Services: Website and Email Migration

5. Resource transparency and isolated VPS environments

Understanding how your server performs is key to optimizing speed, capacity and uptime. With complete access and visibility, you can make data-driven decisions and adjust your environment as your needs evolve.

- Our intuitive dashboard displays real-time usage data for CPU, RAM, bandwidth and storage.

- Three dedicated IPs are included for secure application hosting, improved email delivery and SSL configuration.

- Custom server environments let you install the software and configurations your projects require.

With these systems in place, we help prevent most hosting issues before they start, keeping your server secure, your site online and your business running smoothly. However, when routine fixes are no longer effective or critical systems are at risk, it’s essential to know when to seek expert support.

When every second counts, trust a solution that’s built for performance and reliability. Explore Bluehost dedicated hosting plans to secure your site with unmatched control and power.

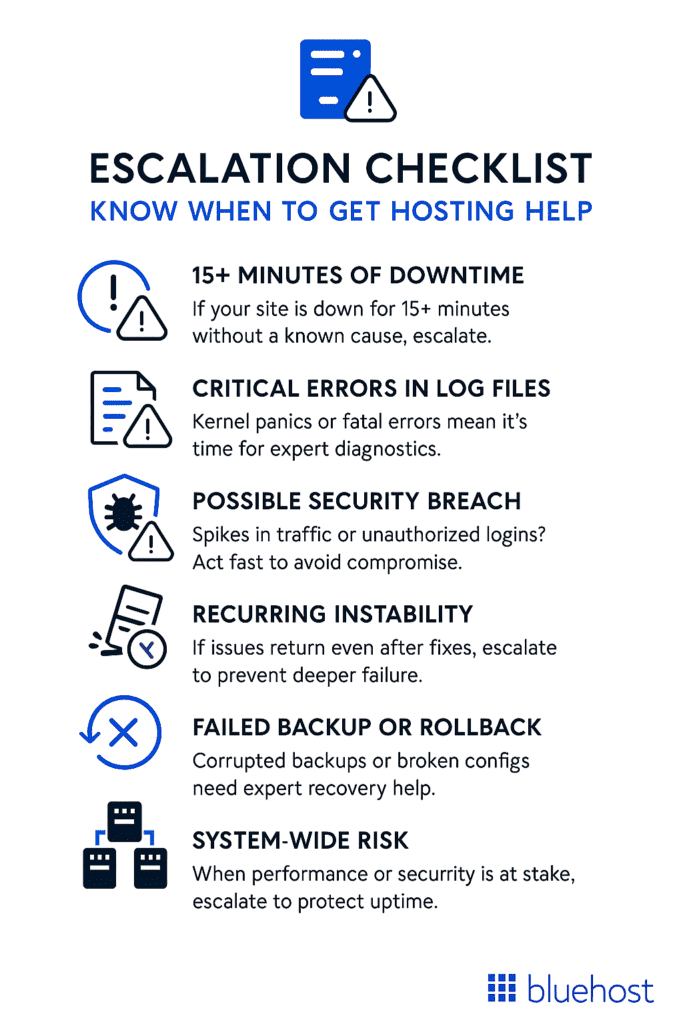

When should you escalate to professional hosting support?

Some dedicated hosting problems go beyond day-to-day fixes. If your server becomes unresponsive, shows repeated failures or raises potential security risks, it’s time to get expert help.

Escalating at the right moment prevents further downtime, protects your data and keeps your infrastructure stable.

Below are key situations that call for contacting your hosting provider:

1. 15+ minutes of unexplained downtime

If your website remains offline for more than 15 minutes and you are unable to identify the root cause, escalate the issue immediately. This kind of outage may indicate deeper issues like hardware failure, routing breakdowns or system crashes.

Prolonged downtime affects users, traffic and your company’s credibility. Quick action can reduce the risk of lost data or missed opportunities. Your provider’s team can conduct more in-depth diagnostics and restore service more quickly than most internal teams.
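If an uptime checker already polls your site, the 15-minute rule can be automated by tracking how long checks have been failing in a row. A minimal sketch; the class name is hypothetical, and the health checks themselves (for example, an HTTP request every minute) are assumed to exist elsewhere. Deciding whether the root cause is really unknown still takes a human.

```python
from datetime import timedelta


class OutageTracker:
    """Track consecutive failed health checks and report when to escalate."""

    def __init__(self, escalate_after=timedelta(minutes=15)):
        self.escalate_after = escalate_after
        self.down_since = None

    def record(self, checked_at, is_up):
        """Record one check result; return True once the outage reaches the threshold."""
        if is_up:
            self.down_since = None  # any successful check resets the outage clock
            return False
        if self.down_since is None:
            self.down_since = checked_at
        return checked_at - self.down_since >= self.escalate_after
```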

2. Log files show critical errors or kernel panics

System logs often reveal what’s happening behind the scenes, but interpreting them can be a complex task. If you spot repeated kernel panics, fatal errors or unexplained service crashes, the issue likely exceeds standard troubleshooting.

These signals often point to operating system instability or misconfigured services. Without proper tools or expertise, resolution can take longer and lead to further failures. Escalating ensures the issue is handled before it affects critical workloads.
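A first pass over kernel logs can be automated by matching a few well-known critical messages. The patterns below cover kernel panics, OOM-killer events and block-device I/O errors as they commonly appear in kernel output, but exact wording varies by kernel version, so treat them as assumptions to adjust. A panic often halts the system before anything reaches the disk, so its message may only survive on the console or, with a persistent journal, in `journalctl -k -b -1` (kernel messages from the previous boot).

```python
import re

# Common forms of a few critical kernel messages; extend to suit your systems.
CRITICAL_PATTERNS = {
    "kernel panic": re.compile(r"Kernel panic - not syncing"),
    "oom kill": re.compile(r"Out of memory: Kill(?:ed)? process \d+"),
    "disk i/o error": re.compile(r"I/O error, dev \w+"),
}


def critical_events(lines):
    """Return {category: [matching lines]} for lines that match a critical pattern."""
    found = {}
    for line in lines:
        for category, pattern in CRITICAL_PATTERNS.items():
            if pattern.search(line):
                found.setdefault(category, []).append(line.rstrip())
    return found
```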

3. Signs of a security breach

Sudden spikes in traffic, unexpected DNS changes or unauthorized login attempts may signal a breach. These attacks can lead to data theft, malware injection or full system compromise if left unchecked.

Every minute counts when dealing with a potential security threat. Hosting support teams have tools to isolate the issue, scan for vulnerabilities and restore secure access. Escalating early reduces long-term risk and limits exposure to external threats. Keeping a site security checklist handy also helps strengthen protection.
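For the "sudden spikes in traffic" signal, a simple baseline comparison flags request rates well above recent history. This sketch compares the current per-minute count against the mean plus `k` standard deviations of past counts; `k`, the minimum history length and the spread floor are illustrative choices. Real traffic has daily and weekly patterns, and a spike on its own is a reason to investigate, not proof of a breach.

```python
import statistics


def is_traffic_spike(history, current, k=4.0, min_history=10):
    """Flag `current` requests per minute if it exceeds mean + k * spread of history."""
    if len(history) < min_history:
        return False  # not enough baseline data to judge
    mean = statistics.fmean(history)
    spread = statistics.pstdev(history)
    # A floor on the spread keeps a perfectly flat baseline from flagging tiny bumps.
    return current > mean + k * max(spread, 0.1 * mean, 1.0)
```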

4. Recurring instability or resource exhaustion

If your server continues to slow down, crash or consume excessive resources, even after restarts, you’re dealing with a persistent underlying issue. It could stem from misconfigured software, memory leaks or disk I/O conflicts.

When basic fixes are no longer effective, escalation is essential to prevent further system degradation. Hosting experts can investigate at the kernel or service level to pinpoint the cause. Delaying action increases the risk of full system failure or irreversible data loss.

5. Failure to restore from backup or rollback

Restoring from backups should be straightforward. However, if recovery attempts fail due to corrupted files, mismatched versions or broken configurations, don’t continue troubleshooting in isolation. Failed rollbacks can delay restoration and extend service outages.

Escalating in this case ensures data recovery is handled quickly and securely. Your provider can verify backup integrity, rebuild systems and help prevent the issue from happening again.

6. System-wide risk

If your server experiences widespread instability across multiple services, databases or applications, it may signal a deeper infrastructure problem. Performance slowdowns, repeated timeouts or signs of compromise across your environment are red flags.

When the impact affects critical services or you suspect a broader vulnerability, escalate immediately. Hosting support can isolate the root cause, contain any threats and restore full system integrity before further damage occurs.

Recognizing when a problem is beyond internal resolution allows you to act quickly and minimize disruption. Escalating at the right time helps maintain server reliability and ensures your users continue to have uninterrupted access.

Final thoughts

Running a dedicated server gives you full control, but it also demands consistent oversight. Technical failures, outdated software or security gaps can cause serious disruptions without warning.

Preventive steps, like real-time monitoring and regular updates, help reduce common hosting issues that can interrupt site access or performance. And when something breaks, quick access to expert help keeps your site safe and your users connected.

At Bluehost, we focus on reliability, protection and long-term performance. Whether you’re scaling operations or troubleshooting dedicated servers, having access to expert help ensures continuous performance and security.

Want reliable, high-performance hosting? Start with Bluehost dedicated hosting and enjoy 99.9% uptime, proactive monitoring, automated backups, DDoS protection and 24/7 expert support, so you can focus on your website while we handle the server side.

FAQs

Common server problems include slow performance, memory leaks and data transfer issues. These can result from outdated software, high resource usage or cyber attacks that cause data corruption, overload infrastructure and harm your company’s reputation if not resolved quickly.

Prevent hardware issues by monitoring system temperatures, replacing aging components and scheduling regular diagnostics. Early intervention avoids physical damage, supports your disaster recovery plan and reduces the risk of downtime or permanent data loss on your dedicated server environment.

It’s best to automate updates, backups and routine system checks. Manual maintenance increases the chances of missed steps or errors, which can lead to data loss. Automation improves reliability, reduces human mistakes and helps maintain consistent uptime and performance.

Use tools like Ping, Traceroute and Netstat to troubleshoot blocked access or delays. These help determine root causes of server issues. On virtual machines, built-in dashboards also provide real-time metrics on resource allocation and connection problems.

A remote access server allows inbound remote access calls from external devices so users can securely connect to internal resources. It plays a vital role in dedicated hosting troubleshooting by providing authorized access while keeping the server less vulnerable to attacks. Proper configuration ensures stability and Bluehost servers are designed with security-first access protocols.

A mail server manages the sending, receiving and storage of email. It uses SMTP to send and relay messages between servers, and IMAP or POP3 to let email clients retrieve them. If poor configuration leaves the mail server vulnerable, your business may face spam, phishing or downtime. Prompt server troubleshooting keeps communication running smoothly.

Server configuration defines how hardware and software resources are set up to handle requests. Proper configuration helps avoid common server problems like poor resource allocation, failed updates or domain controller misconfigurations. An optimized setup improves scalability and efficiency and reduces how often you need to troubleshoot in day-to-day operations.

Server outages are often linked to hardware failure, misconfigured systems or unpatched security flaws. Preventive measures like redundancy planning, disaster recovery strategies and proactive monitoring help you catch and fix server problems before they cause downtime. Regular updates and automated alerts reduce these risks significantly.
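An automated alert can be as small as a threshold check. The Python sketch below uses only the standard library to flag a filesystem once its used space crosses a set fraction of total capacity. The function name, the `/` default path and the 90% threshold are assumptions for illustration; in practice you would send the alert to email or your monitoring system instead of printing it, and run the check on a schedule.

```python
import shutil


def disk_usage_alert(path: str = "/", threshold: float = 0.90) -> bool:
    """Return True (and print a warning) if the filesystem containing `path`
    has used at least `threshold` (a fraction from 0 to 1) of its capacity.

    Hypothetical helper for illustration.
    """
    usage = shutil.disk_usage(path)  # named tuple of bytes: total, used, free
    fraction_used = usage.used / usage.total
    if fraction_used >= threshold:
        # Placeholder alert: swap print() for email or a monitoring webhook.
        print(f"ALERT: {path} is {fraction_used:.0%} full "
              f"({usage.free / 2**30:.1f} GiB free)")
        return True
    return False
```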

Network issues such as packet loss, latency or bandwidth congestion can slow down server performance, directly affecting connectivity and response times. Tools like Traceroute and Netstat help pinpoint where traffic is slowing down so you can restore stability to your hosting environment. Bluehost support teams use advanced monitoring tools to detect and fix these bottlenecks quickly.

Common hardware issues include failing hard drives, overheating CPUs, faulty memory and power supply disruptions. In dedicated hosting, hardware failure can cause downtime or data corruption. Continuous monitoring helps catch these problems early, and quickly replacing faulty components minimizes their impact.

Businesses often face server issues such as downtime, performance lags, security breaches and misconfigurations. Knowing how to resolve them quickly through troubleshooting, monitoring and disaster recovery planning helps maintain operational continuity and reduces financial impact.

Security risks include malware, unauthorized access and DDoS attacks. A vulnerable server is exposed to data theft and downtime. Bluehost strengthens defenses with firewalls, intrusion detection and regular patching, which form the backbone of reliable server protection.

The physical layer handles the transmission of raw data as electrical, optical or radio signals over cables, connectors, network interfaces and wireless links. Faults here often cause connectivity-related server issues. Troubleshooting at the physical layer, such as checking cabling and hardware interfaces, should come before escalating to higher network layers.

Connectivity issues interrupt communication between users and servers, which users experience as downtime. For businesses relying on dedicated hosting, knowing what to do if a server is down is crucial. Quick troubleshooting minimizes service disruption and limits the impact on customers.

Common server problems are identified through monitoring tools, error logs and diagnostic commands. Once a problem is detected, troubleshooting involves fixing misconfigurations, upgrading outdated hardware or applying security patches. These steps improve dedicated hosting stability and uptime. Knowing how to solve server problems shortens outages, and clear action plans tell your team what to do if a server is down.

Yes. All Bluehost dedicated plans include built-in DDoS mitigation, a web application firewall (WAF) and 24/7 monitoring to help prevent downtime. Our infrastructure is designed to absorb malicious traffic while keeping legitimate visitors unaffected. This supports high uptime, faster response times and stronger overall security for mission-critical websites.

Contact your hosting provider, restore from the latest backup and run diagnostics on logs and resource usage. Also check firewall rules, recent updates and configuration changes that might have triggered the crash. Proactive monitoring and automated alerts can help identify issues earlier, reducing recovery time significantly.
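When reviewing logs after a crash, a quick count of errors and warnings can tell you where to start before you read line by line. The Python sketch below tallies log lines by severity keyword. The keyword list and the sample format are assumptions, loosely modeled on Apache-style `[error]` and `[warn]` tags, so adjust the pattern to match the logs on your own server.

```python
import re
from collections import Counter
from typing import Iterable

# Assumed severity keywords mapped to a canonical bucket; adjust for your logs.
LEVELS = {
    "emerg": "emerg", "alert": "alert",
    "crit": "crit", "critical": "crit",
    "err": "error", "error": "error",
    "warn": "warn", "warning": "warn",
}
# Whole-word, case-insensitive match on any of the keywords above.
SEVERITY = re.compile(r"\b(" + "|".join(LEVELS) + r")\b", re.IGNORECASE)


def summarize_log(lines: Iterable[str]) -> Counter:
    """Count log lines by the first severity keyword each one contains
    (hypothetical helper for illustration)."""
    counts: Counter = Counter()
    for line in lines:
        match = SEVERITY.search(line)
        if match:
            counts[LEVELS[match.group(1).lower()]] += 1
    return counts
```

You could pass it an open log file, such as `/var/log/apache2/error.log` on Debian-based systems; the path varies by distribution and web server.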

Yes. Downtime and slow performance can hurt your search rankings. Search engines prioritize websites with consistent uptime and optimized performance, and Bluehost SEO Tools help you monitor and maintain site health. Regular monitoring, speed improvements and secure hosting help keep your site SEO-friendly and reliable.
